1. Download Spark

```
# git clone https://github.com/apache/spark.git
# cd spark
# git checkout branch-2.4
```

2. Configure the Maven repositories

```xml
<repository>
  <id>mavenc</id>
  <name>cloudera Repository</name>
  <url>http://central.maven.org/maven2/</url>
</repository>
<repository>
  <id>central</id>
  <name>Maven Repository</name>
  <url>https://repo.maven.apache.org/maven2</url>
  <releases>
    <enabled>true</enabled>
  </releases>
  <snapshots>
    <enabled>false</enabled>
  </snapshots>
</repository>
<repository>
  <id>cloudera</id>
  <name>cloudera repository</name>
  <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
</repository>
```

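This replaces the original single repository entry with these three. Despite its `name`, the first entry (`mavenc`) points at Maven Central over plain HTTP. Maven Central has required HTTPS since January 2020, so that entry may no longer resolve. The `central` entry reaches the same artifacts over HTTPS.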

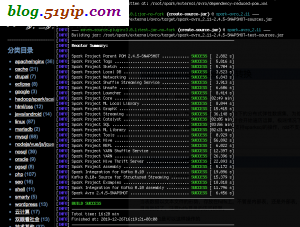
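A quick way to confirm that the edit took effect is to search the pom for each of the three URLs. The sketch below is a hypothetical helper: `check_repos` is not part of Spark's build. It assumes the repositories were added to the `pom.xml` in the root of the Spark checkout.

```shell
# check_repos: hypothetical helper, not part of Spark's build.
# Prints each repository URL from step 2 that does not appear in the given
# pom file, and returns 1 if any is missing (0 if all three are present).
check_repos() {
  local pom="${1:-pom.xml}" missing=0 url
  for url in \
    "http://central.maven.org/maven2/" \
    "https://repo.maven.apache.org/maven2" \
    "https://repository.cloudera.com/artifactory/cloudera-repos/"
  do
    # -F matches the URL literally (it contains '.'); -q suppresses grep output.
    if ! grep -qF "<url>${url}</url>" "$pom"; then
      echo "missing: ${url}"
      missing=1
    fi
  done
  return "$missing"
}
```

Run it from the checkout root, for example `check_repos pom.xml && echo "all three repositories configured"`.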

3. Rebuild

```
# ./build/mvn -Pyarn -Phive -Phive-thriftserver -Phadoop-3.0 -Dhadoop.version=3.0.0-cdh6.3.1 -DskipTests clean package -e
```

Note: Spark 2.4 cannot be compiled against Hive 2.1.1; the two versions are incompatible.

4. Problems encountered during the build

Problem 1:

```
[WARNING] The requested profile "hadoop-3.0" could not be activated because it does not exist.
```

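Solution: add the Cloudera repository. The warning by itself only means that Spark's pom defines no `hadoop-3.0` profile. What the build actually needs is the `3.0.0-cdh6.3.1` Hadoop artifacts requested via `-Dhadoop.version`, and those are published in the Cloudera repository rather than in Maven Central: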

```xml
<repository>
  <id>cloudera</id>
  <name>cloudera repository</name>
  <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
</repository>
```
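Once the Cloudera repository is in place, you can check whether Maven actually resolved the CDH Hadoop artifacts by looking in the local repository. This is a sketch under stated assumptions:

- `cdh_hadoop_cached` is a hypothetical helper name.
- It assumes the default local repository `~/.m2/repository`; a `localRepository` setting in `settings.xml` would move it.
- It uses `org.apache.hadoop:hadoop-client` as the probe artifact.

```shell
# cdh_hadoop_cached: hypothetical helper, not part of Spark or Maven.
# Maven's local repository layout is <groupId as path>/<artifactId>/<version>/,
# so org.apache.hadoop:hadoop-client:<version> is stored under
# org/apache/hadoop/hadoop-client/<version>/hadoop-client-<version>.pom
cdh_hadoop_cached() {
  local repo="${1:-$HOME/.m2/repository}"   # assumed default location
  local version="${2:-3.0.0-cdh6.3.1}"      # value passed via -Dhadoop.version
  local pom="${repo}/org/apache/hadoop/hadoop-client/${version}/hadoop-client-${version}.pom"
  if [ -f "$pom" ]; then
    echo "found: ${pom}"
  else
    echo "not cached: org.apache.hadoop:hadoop-client:${version}" >&2
    return 1
  fi
}
```

After the build has run at least once, `cdh_hadoop_cached` with no arguments checks the defaults above.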

Problem 2:

```
[INFO] Using zinc server for incremental compilation
[warn] Pruning sources from previous analysis, due to incompatible CompileSetup.
[info] Compiling 2 Scala sources and 6 Java sources to /root/spark/common/tags/target/scala-2.11/classes...
[error] Cannot run program "javac": error=2, No such file or directory
```

Solution: put the JDK's `bin` directory, which contains `javac`, at the front of `PATH`:

```
export PATH=$JAVA_HOME/bin:$PATH
```
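The error means the `javac` executable could not be found on `PATH`, for example when only a JRE's `java` is there. A slightly more defensive variant of the export above checks first. It fails loudly if `JAVA_HOME` does not point to a JDK. `ensure_javac` is a hypothetical helper name.

```shell
# ensure_javac: hypothetical helper, not part of Spark's build scripts.
# If javac is not already on PATH, prepend $JAVA_HOME/bin (the same fix as
# the export above), then print the path of the javac that will be used.
ensure_javac() {
  if ! command -v javac >/dev/null 2>&1; then
    if [ -z "${JAVA_HOME:-}" ] || [ ! -x "${JAVA_HOME}/bin/javac" ]; then
      echo "javac not found: point JAVA_HOME at a JDK, not a JRE" >&2
      return 1
    fi
    export PATH="${JAVA_HOME}/bin:${PATH}"
  fi
  command -v javac
}
```

Call it in the same shell that runs the build, for example `ensure_javac && ./build/mvn ...`. The `PATH` change then applies to the Maven run.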

Problem 3:

```
[INFO] Using zinc server for incremental compilation
[ERROR] Failed to construct terminal; falling back to unsupported
java.lang.NumberFormatException: For input string: "0x100"
```

Solution: set `TERM` to a simpler terminal type:

```
export TERM=xterm-color
```
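The exception appears to come from parsing the capabilities of the current terminal type; `0x100` is 256 in hex, as reported by 256-colour terminal types. That would explain why switching `TERM` helps. If you prefer not to change `TERM` for the whole session, the override can be scoped to the build command. `with_simple_term` is a hypothetical helper name.

```shell
# with_simple_term: hypothetical helper. Runs a single command with
# TERM=xterm-color (the value used above) while leaving TERM unchanged
# for the rest of the shell session.
with_simple_term() {
  TERM=xterm-color "$@"
}
```

For example: `with_simple_term ./build/mvn -Pyarn -Phive -Phive-thriftserver -Phadoop-3.0 -Dhadoop.version=3.0.0-cdh6.3.1 -DskipTests clean package -e`.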

Problem 4:

```
Exception in thread "main" java.lang.NoSuchFieldError: HIVE_STATS_JDBC_TIMEOUT
```

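Solution: this is a runtime error. The Hive jars on the classpath (here presumably CDH's Hive 2.1.1) do not define `ConfVars.HIVE_STATS_JDBC_TIMEOUT`. Edit `HiveUtils.scala`, comment out the `HIVE_STATS_JDBC_TIMEOUT` and `HIVE_STATS_RETRIES_WAIT` entries, and rebuild: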

```
# vim sql/hive/src/main/scala/org/apache/spark/sql/hive/HiveUtils.scala
```

```scala
ConfVars.METASTORE_AGGREGATE_STATS_CACHE_MAX_WRITER_WAIT -> TimeUnit.MILLISECONDS,
ConfVars.METASTORE_AGGREGATE_STATS_CACHE_MAX_READER_WAIT -> TimeUnit.MILLISECONDS,
ConfVars.HIVES_AUTO_PROGRESS_TIMEOUT -> TimeUnit.SECONDS,
ConfVars.HIVE_LOG_INCREMENTAL_PLAN_PROGRESS_INTERVAL -> TimeUnit.MILLISECONDS,
//ConfVars.HIVE_STATS_JDBC_TIMEOUT -> TimeUnit.SECONDS,
//ConfVars.HIVE_STATS_RETRIES_WAIT -> TimeUnit.MILLISECONDS,
ConfVars.HIVE_LOCK_SLEEP_BETWEEN_RETRIES -> TimeUnit.SECONDS,
ConfVars.HIVE_ZOOKEEPER_SESSION_TIMEOUT -> TimeUnit.MILLISECONDS,
ConfVars.HIVE_ZOOKEEPER_CONNECTION_BASESLEEPTIME -> TimeUnit.MILLISECONDS,
ConfVars.HIVE_TXN_TIMEOUT -> TimeUnit.SECONDS,
```
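Editing by hand works, but scripting the same change makes it repeatable on a fresh checkout. This sketch makes the following assumptions:

- `comment_out_hive_stats` is a hypothetical helper name.
- Each of the two entries starts a line (after indentation) as `ConfVars.HIVE_STATS_JDBC_TIMEOUT ->` or `ConfVars.HIVE_STATS_RETRIES_WAIT ->`, as in the excerpt above.
- Each run keeps a copy of the file as it was before that run, as `HiveUtils.scala.bak`.

```shell
# comment_out_hive_stats: hypothetical helper, not part of Spark.
# Prefixes the two HIVE_STATS_* entries with // (the edit shown above).
# Lines that already start with // no longer match the pattern, so a
# second run leaves the file unchanged. -i.bak works with GNU and BSD sed.
comment_out_hive_stats() {
  local file="${1:-sql/hive/src/main/scala/org/apache/spark/sql/hive/HiveUtils.scala}"
  sed -i.bak -E \
    's#^([[:space:]]*)(ConfVars\.(HIVE_STATS_JDBC_TIMEOUT|HIVE_STATS_RETRIES_WAIT)[[:space:]]*->)#\1//\2#' \
    "$file"
}
```

Run it from the Spark checkout root before rebuilding. `grep -n 'HIVE_STATS_' sql/hive/src/main/scala/org/apache/spark/sql/hive/HiveUtils.scala` then shows whether both entries are commented out.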

When reposting, please credit the source.

Author: 海底苍鹰

URL: http://blog.51yip.com/hadoop/2329.html