For developers who are used to SQL, writing SQL is far more natural than chaining map, filter and the other built-in operators.

I. sbt project

1. build.sbt configuration

```scala
name := "scalatest"

version := "0.1"

scalaVersion := "2.11.8"

libraryDependencies += "com.alibaba" % "fastjson" % "1.2.49"

libraryDependencies ++= Seq(
  "org.apache.spark" % "spark-core_2.11" % "2.3.0",
  "org.apache.spark" % "spark-hive_2.11" % "2.3.0",
  "org.apache.spark" % "spark-sql_2.11" % "2.3.0"
)
```

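Choose the versions of spark-core, spark-hive and spark-sql to match your own environment. The `_2.11` suffix in each artifact name is the Scala binary version, so it must match `scalaVersion`.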
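As a variant, the three Spark modules can share one version value and use sbt's `%%` operator. `%%` appends the Scala binary suffix (`_2.11` for `scalaVersion` 2.11.8) automatically, so it cannot drift out of sync. This is a sketch, not the original build. The `sparkVersion` name is a hypothetical helper introduced here.

```scala
// build.sbt: sketch that keeps all Spark modules on one version
name := "scalatest"
version := "0.1"
scalaVersion := "2.11.8"

// Hypothetical helper value: change it in one place to switch Spark versions
val sparkVersion = "2.3.0"

libraryDependencies ++= Seq(
  "com.alibaba" % "fastjson" % "1.2.49",
  // %% adds the Scala binary suffix taken from scalaVersion (here _2.11)
  "org.apache.spark" %% "spark-core" % sparkVersion,
  "org.apache.spark" %% "spark-hive" % sparkVersion,
  "org.apache.spark" %% "spark-sql"  % sparkVersion
)
```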

2. Test code

```scala
package ex

import org.apache.spark.sql.SparkSession

object tank {

  // Directory that holds the data file; set with --data <dir>
  var data = ""

  def main(args: Array[String]): Unit = {

    val spark = SparkSession.builder()
      .master("local")
      // .config("spark.sql.catalogImplementation", "hive") // same effect as enableHiveSupport()
      .enableHiveSupport()
      .appName("tanktest")
      .getOrCreate()

    import spark.implicits._

    // Hive text table with '|'-separated fields; IF NOT EXISTS lets the program be re-run
    val tanktest: String = "create table if not exists `tank_test` (" +
      "`creative_id` string," +
      "`category_name` string," +
      "`ad_keywords` string," +
      "`creative_type` string," +
      "`inventory_type` string," +
      "`gender` string," +
      "`source` string," +
      "`advanced_creative_title` string," +
      "`first_industry_name` string," +
      "`second_industry_name` string)" +
      " ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n' STORED AS TEXTFILE"

    // Parse command-line arguments; a trailing flag without a value is skipped
    for (i <- args.indices by 2 if i + 1 < args.length) {
      args(i) match {
        case "--data" => data = args(i + 1)
        case other    => System.err.println(s"Ignoring unknown argument: $other")
      }
    }

    spark.sql(tanktest)
    spark.sql(s"LOAD DATA LOCAL INPATH '$data/creat_partd' INTO TABLE tank_test")
    spark.sql("select count(*) as total from tank_test").show()

    spark.stop()
  }
}
```
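If more flags than `--data` are needed later, a small parsing helper may be easier to maintain than the index loop. The sketch below assumes flags always come as `--name value` pairs. `ArgParser` and `parse` are hypothetical names, not part of Spark.

```scala
object ArgParser {
  /** Turns Array("--data", "/tmp/in", "--foo", "bar") into Map("data" -> "/tmp/in", "foo" -> "bar").
    * Elements are taken two at a time; a trailing flag without a value, or a pair
    * whose first element does not start with "--", is ignored instead of throwing. */
  def parse(args: Array[String]): Map[String, String] =
    args.sliding(2, 2).collect {
      case Array(flag, value) if flag.startsWith("--") => flag.stripPrefix("--") -> value
    }.toMap
}
```

In `main` this could replace the loop with something like `data = ArgParser.parse(args).getOrElse("data", ".")`. The `"."` default (current directory) is an assumption.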

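If you get:

Exception in thread "main" org.apache.spark.sql.AnalysisException: Hive support is required to CREATE Hive TABLE (AS SELECT);

it means the SparkSession is using Spark's built-in in-memory catalog instead of the Hive catalog. There are two fixes; apply either one to the SparkSession builder:

```scala
// Option 1: select the Hive catalog through configuration
.config("spark.sql.catalogImplementation", "hive")

// Option 2: the builder shortcut; it sets the same option and fails immediately if the spark-hive classes are missing
.enableHiveSupport()
```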

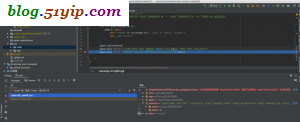
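`enableHiveSupport()` refuses to build the session ("Hive classes are not found") when the spark-hive dependency is missing from the classpath. The sketch below runs a quick check for that case. The two class names are the ones Spark 2.x appears to probe before enabling Hive support, to the best of our knowledge; treat them as an assumption and verify them against your Spark version. `HiveClasspathCheck` is a hypothetical name.

```scala
object HiveClasspathCheck {
  // Assumed to be the classes Spark 2.x looks for before enabling Hive support
  val HiveClasses: Seq[String] = Seq(
    "org.apache.spark.sql.hive.HiveSessionStateBuilder",
    "org.apache.hadoop.hive.conf.HiveConf"
  )

  /** True only if every named class can be loaded (without running its static initializer). */
  def classesPresent(names: Seq[String]): Boolean =
    names.forall { name =>
      try { Class.forName(name, false, getClass.getClassLoader); true }
      catch { case _: ClassNotFoundException | _: LinkageError => false }
    }

  def main(args: Array[String]): Unit =
    if (classesPresent(HiveClasses)) println("Hive classes found: enableHiveSupport() should work")
    else println("Hive classes missing: add the spark-hive dependency")
}
```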

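3. IDEA debug configuration

In the IDEA run/debug configuration, pass the data directory through Program arguments as `--data <directory>`; the program then loads `<directory>/creat_partd`.

4. Debug result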

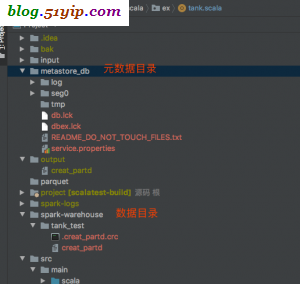

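Note: this local debugging session does not connect to a remote Hive, and hive.metastore.warehouse.dir is not set either. As a result, both the metastore directory and the data directory are created under the current project directory.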
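With no hive-site.xml, Spark 2.x with Hive support uses an embedded Derby metastore (`metastore_db`) and a `spark-warehouse` directory, both relative to the working directory. Since Spark 2.0, `spark.sql.warehouse.dir` is the setting that moves the warehouse elsewhere; it replaces `hive.metastore.warehouse.dir`. The sketch below only prints where those default directories should be and whether they exist yet. `LocalHiveDirs` is a hypothetical name, and the table path assumes a managed table in the `default` database.

```scala
import java.io.File

object LocalHiveDirs {
  /** Default local directories: embedded Derby metastore and the table warehouse. */
  def defaultDirs(workDir: File): Map[String, File] = Map(
    "metastore" -> new File(workDir, "metastore_db"),
    "warehouse" -> new File(workDir, "spark-warehouse")
  )

  /** Expected data directory of a managed table in the default database. */
  def tableDir(workDir: File, table: String): File =
    new File(new File(workDir, "spark-warehouse"), table)

  def main(args: Array[String]): Unit = {
    val cwd = new File(System.getProperty("user.dir"))
    for ((label, dir) <- defaultDirs(cwd)) {
      val state = if (dir.isDirectory) "exists" else "not created yet"
      println(s"$label: ${dir.getAbsolutePath} ($state)")
    }
    println(s"tank_test data: ${tableDir(cwd, "tank_test").getAbsolutePath}")
  }
}
```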

II. Maven project

1. Add the following to the `<dependencies>` section of pom.xml

```xml
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.11</artifactId>
    <version>2.3.0</version>
</dependency>

<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-hive_2.11</artifactId>
    <version>2.3.0</version>
</dependency>

<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_2.11</artifactId>
    <version>2.3.0</version>
</dependency>
```
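In Maven, the Scala suffix and the Spark version can likewise be kept in one place with properties. This is a sketch. The property names `scala.binary.version` and `spark.version` are assumptions chosen for illustration, so rename them to fit your pom.

```xml
<properties>
    <!-- Hypothetical property names; the suffix must match the project's Scala version -->
    <scala.binary.version>2.11</scala.binary.version>
    <spark.version>2.3.0</spark.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-hive_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
    </dependency>
</dependencies>
```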

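Everything else is the same as above. This kind of local setup only gets the SQL in the code running; there is no real data, which has to be copied from the production cluster. The next post covers how to connect a local spark-sql to the production Hive.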
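Until real data is copied over, a few placeholder rows can stand in so that the LOAD DATA and count queries have something to read. The sketch writes made-up, `|`-separated rows in the column order of `tank_test`, one per line with `\n` endings. The values are placeholders, not real data, and `SampleData` is a hypothetical name. The file name `creat_partd` is taken from the test code's LOAD DATA path.

```scala
import java.io.File
import java.nio.charset.StandardCharsets
import java.nio.file.Files

object SampleData {
  // Column order of the tank_test table
  val Columns: Seq[String] = Seq(
    "creative_id", "category_name", "ad_keywords", "creative_type", "inventory_type",
    "gender", "source", "advanced_creative_title", "first_industry_name", "second_industry_name")

  /** n placeholder rows; each field is "<column>_<row number>", joined with '|'. */
  def rows(n: Int): Seq[String] =
    (1 to n).map(i => Columns.map(c => s"${c}_$i").mkString("|"))

  /** Writes the rows to dir/fileName with '\n' line endings and returns the file. */
  def write(dir: File, fileName: String, n: Int): File = {
    dir.mkdirs()
    val out = new File(dir, fileName)
    Files.write(out.toPath, rows(n).map(_ + "\n").mkString.getBytes(StandardCharsets.UTF_8))
    out
  }
}
```

For example, `SampleData.write(new File("/some/dir"), "creat_partd", 100)` followed by running the program with `--data /some/dir` should make the count query report 100 rows on a fresh table.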

When reposting, please credit the source.

Author: 海底苍鹰

URL: http://blog.51yip.com/hadoop/2333.html