phoenix是构建在Hbase之上,使用标准的SQL操作Hbase,可以做联机事务处理,拥有低延迟的特性。

cdh的安装,请参考:cloudera cdh 6.3 安装配置

impala可以整合Hbase,但是不支持delete,upsert。如果想要impala的delete,upsert,存储要用kudu。请参考:impala与hbase整合

hive可以整合Hbase,受限于hive自己的原因,和hbase并不能配合的很好。请参考:hive与hbase整合

一,下载,并安装phoenix

# wget http://archive.apache.org/dist/phoenix/apache-phoenix-5.0.0-HBase-2.0/bin/apache-phoenix-5.0.0-HBase-2.0-bin.tar.gz # tar zxvf apache-phoenix-5.0.0-HBase-2.0-bin.tar.gz # mv apache-phoenix-5.0.0-HBase-2.0-bin /bigdata/phoenix # scp -r /bigdata/phoenix bigserver2:/bigdata/ # scp -r /bigdata/phoenix bigserver3:/bigdata/ # scp -r /bigdata/phoenix bigserver4:/bigdata/ # scp -r /bigdata/phoenix bigserver5:/bigdata/

二,配置phoenix

1,配置环境变量

# vim ~/.bashrc //追加 export PHOENIX_HOME=/bigdata/phoenix export PATH=$PHOENIX_HOME/bin:$PATH # source ~/.bashrc

2,copy phoenix jar包到hbase lib中

# cp phoenix-5.0.0-HBase-2.0-* /opt/cloudera/parcels/CDH/lib/hbase/lib # scp phoenix-5.0.0-HBase-2.0-* bigserver1:/opt/cloudera/parcels/CDH/lib/hbase/lib # scp phoenix-5.0.0-HBase-2.0-* bigserver2:/opt/cloudera/parcels/CDH/lib/hbase/lib # scp phoenix-5.0.0-HBase-2.0-* bigserver3:/opt/cloudera/parcels/CDH/lib/hbase/lib # scp phoenix-5.0.0-HBase-2.0-* bigserver4:/opt/cloudera/parcels/CDH/lib/hbase/lib

3,配置服务端(hbase)hbase-site.xml

<property>

<name>hbase.table.sanity.checks</name>

<value>false</value>

</property>

<property>

<name>phoenix.schema.isNamespaceMappingEnabled</name>

<value>true</value>

</property>

<property>

<name>phoenix.schema.mapSystemTablesToNamespace</name>

<value>true</value>

</property>

配置完成后,要重启cdh。

4,配置客户端(phoenix)hbase-site.xml

# cd /bigdata/phoenix/bin

# cp hbase-site.xml hbase-site.xml_bak20200119

# cp /etc/hbase/conf/hbase-site.xml ./

# vim hbase-site.xml //添加以下内容

<property>

<name>hbase.table.sanity.checks</name>

<value>false</value>

</property>

<property>

<name>phoenix.schema.isNamespaceMappingEnabled</name>

<value>true</value>

</property>

<property>

<name>phoenix.schema.mapSystemTablesToNamespace</name>

<value>true</value>

</property>

# scp /bigdata/phoenix/bin/hbase-site.xml bigserver2:/bigdata/phoenix/bin/

# scp /bigdata/phoenix/bin/hbase-site.xml bigserver3:/bigdata/phoenix/bin/

# scp /bigdata/phoenix/bin/hbase-site.xml bigserver4:/bigdata/phoenix/bin/

# scp /bigdata/phoenix/bin/hbase-site.xml bigserver5:/bigdata/phoenix/bin/

服务端和客户端的配置,最好是一样。不然有可能会报以一下错误。

Error: ERROR 726 (43M10): Inconsistent namespace mapping properties. Ensure that config phoenix.schema.isNamespaceMappingEnabled is consistent on client and server. (state=43M10,code=726)

java.sql.SQLException: ERROR 726 (43M10): Inconsistent namespace mapping properties. Ensure that config phoenix.schema.isNamespaceMappingEnabled is consistent on client and server.

at org.apache.phoenix.exception.SQLExceptionCode$Factory$1.newException(SQLExceptionCode.java:494)

at org.apache.phoenix.exception.SQLExceptionInfo.buildException(SQLExceptionInfo.java:150)

at org.apache.phoenix.query.ConnectionQueryServicesImpl.checkClientServerCompatibility(ConnectionQueryServicesImpl.java:1318)

三,测试phoenix

1,查看hive与hbase整合

hive> select * from hive_hbase_test; OK 1000320190925 1 tank man 1000320190926 2 tank1 man 1000320190927 3 tank2 man1 Time taken: 0.326 seconds, Fetched: 3 row(s) hive> show create table hive_hbase_test; OK CREATE EXTERNAL TABLE `hive_hbase_test`( `key` bigint COMMENT '', `id` bigint COMMENT '', `name` string COMMENT '', `sex` string COMMENT '') ROW FORMAT SERDE 'org.apache.hadoop.hive.hbase.HBaseSerDe' STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES ( 'hbase.columns.mapping'=':key,login:id,login:username,info:sex', 'serialization.format'='1') TBLPROPERTIES ( 'hbase.table.name'='tank_ns:user', 'transient_lastDdlTime'='1578455335') Time taken: 0.186 seconds, Fetched: 15 row(s)

2,查看hbase的数据

hbase(main):003:0> list_namespace NAMESPACE default hbase tank_ns 3 row(s) Took 0.0244 seconds hbase(main):005:0* scan 'tank_ns:user' ROW COLUMN+CELL 1000320190925 column=info:sex, timestamp=1579424039575, value=man 1000320190925 column=login:_0, timestamp=1579424039575, value= 1000320190925 column=login:id, timestamp=1579424039495, value=1 1000320190925 column=login:username, timestamp=1579424039542, value=tank 1000320190926 column=info:sex, timestamp=1579424039733, value=man 1000320190926 column=login:_0, timestamp=1579424039733, value= 1000320190926 column=login:id, timestamp=1579424039633, value=2 1000320190926 column=login:username, timestamp=1579424039666, value=tank1 1000320190927 column=info:sex, timestamp=1579424040312, value=man1 1000320190927 column=login:_0, timestamp=1579424040312, value= 1000320190927 column=login:id, timestamp=1579424039771, value=3 1000320190927 column=login:username, timestamp=1579424039800, value=tank2 3 row(s) Took 0.0656 seconds

3,创建phoenix表

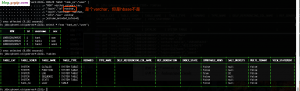

[root@bigserver4 bin]# sqlline.py bigserver4:2181 Setting property: [incremental, false] Setting property: [isolation, TRANSACTION_READ_COMMITTED] issuing: !connect jdbc:phoenix:bigserver4:2181 none none org.apache.phoenix.jdbc.PhoenixDriver Connecting to jdbc:phoenix:bigserver4:2181 SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/home/bigdata/phoenix/phoenix-5.0.0-HBase-2.0-client.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 20/01/19 15:28:25 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable 20/01/19 15:28:27 WARN query.ConnectionQueryServicesImpl: Expected 5 system tables but found 6:[SYSTEM.CATALOG, SYSTEM.FUNCTION, SYSTEM.LOG, SYSTEM.MUTEX, SYSTEM.SEQUENCE, SYSTEM.STATS] Connected to: Phoenix (version 5.0) Driver: PhoenixEmbeddedDriver (version 5.0) Autocommit status: true Transaction isolation: TRANSACTION_READ_COMMITTED Building list of tables and columns for tab-completion (set fastconnect to true to skip)... 133/133 (100%) Done Done sqlline version 1.2.0 0: jdbc:phoenix:bigserver4:2181> CREATE TABLE "user" ( //创建user表,没有挂载schema . . . . . . . . . . . . . . . .> "ROW" varchar primary key, . . . . . . . . . . . . . . . .> "login"."id" bigint, . . . . . . . . . . . . . . . .> "login"."name" varchar, . . . . . . . . . . . . . . . .> "info"."sex" varchar . . . . . . . . . . . . . . . .> )column_encoded_bytes=0; No rows affected (2.479 seconds) 0: jdbc:phoenix:bigserver4:2181> !tables +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+----------------+ | TABLE_CAT | TABLE_SCHEM | TABLE_NAME | TABLE_TYPE | REMARKS | TYPE_NAME | SELF_REFERENCING_COL_NAME | REF_GENERATION | INDEX_STATE | IMMUTABLE_ROWS | SALT_BUCKETS | MULTI_TENANT | VIEW_STATEMENT | +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+----------------+ | | SYSTEM | CATALOG | SYSTEM TABLE | | | | | | false | null | false | | | | SYSTEM | FUNCTION | SYSTEM TABLE | | | | | | false | null | false | | | | SYSTEM | LOG | SYSTEM TABLE | | | | | | true | 32 | false | | | | SYSTEM | SEQUENCE | SYSTEM TABLE | | | | | | false | null | false | | | | SYSTEM | STATS | SYSTEM TABLE | | | | | | false | null | false | | | | | user | TABLE | | | | | | false | null | false | | +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+----------------+ 0: jdbc:phoenix:bigserver4:2181> select * from "user"; //无数据 +------+-----+-------+------+ | ROW | id | name | sex | +------+-----+-------+------+ +------+-----+-------+------+ No rows selected (0.052 seconds) 0: jdbc:phoenix:bigserver4:2181> create schema "tank_ns"; //创建schema,根hbase的namespace差不多,好比是mysql的数据库名 No rows affected (0.014 seconds) 0: jdbc:phoenix:bigserver4:2181> CREATE TABLE "tank_ns"."user" ( . . . . . . . . . . . . . . . .> "ROW" varchar primary key, . . . . . . . . . . . . . . . .> "login"."id" bigint, . . . . . . . . . . . . . . . .> "login"."name" varchar, . . . . . . . . . . . . . . . .> "info"."sex" varchar . . . . . . . . . . . . . . . .> )column_encoded_bytes=0; 4 rows affected (8.521 seconds) //创建成功 0: jdbc:phoenix:bigserver4:2181> !tables +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+----------------+ | TABLE_CAT | TABLE_SCHEM | TABLE_NAME | TABLE_TYPE | REMARKS | TYPE_NAME | SELF_REFERENCING_COL_NAME | REF_GENERATION | INDEX_STATE | IMMUTABLE_ROWS | SALT_BUCKETS | MULTI_TENANT | VIEW_STATEMENT | +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+----------------+ | | SYSTEM | CATALOG | SYSTEM TABLE | | | | | | false | null | false | | | | SYSTEM | FUNCTION | SYSTEM TABLE | | | | | | false | null | false | | | | SYSTEM | LOG | SYSTEM TABLE | | | | | | true | 32 | false | | | | SYSTEM | SEQUENCE | SYSTEM TABLE | | | | | | false | null | false | | | | SYSTEM | STATS | SYSTEM TABLE | | | | | | false | null | false | | | | | user | TABLE | | | | | | false | null | false | | | | tank_ns | user | TABLE | | | | | | false | null | false | | +------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+----------------+

报错

0: jdbc:phoenix:bigserver4:2181> select * from "tank_ns"."user";

Error: ERROR 201 (22000): Illegal data. Expected length of at least 8 bytes, but had 5 (state=22000,code=201)

java.sql.SQLException: ERROR 201 (22000): Illegal data. Expected length of at least 8 bytes, but had 5

at org.apache.phoenix.exception.SQLExceptionCode$Factory$1.newException(SQLExceptionCode.java:494)

at org.apache.phoenix.exception.SQLExceptionInfo.buildException(SQLExceptionInfo.java:150)

报错原因是:hbase中的整形,不能被phoenix识别。但是通过phoenix创建的整形字段是可以的。所以创建phoenix表,映射hbase时,整形要换varchar

CREATE TABLE "tank_ns"."user" (

"ROW" varchar primary key,

"login"."id" varchar,

"login"."username" varchar,

"info"."sex" varchar

)column_encoded_bytes=0;

4,删除user表,重新建表

0: jdbc:phoenix:bigserver4:2181> drop table "tank_ns"."user"; hbase> create 'tank_ns:user','login','info' hbase> put 'tank_ns:user','1000320190925','login:id','1' hbase> put 'tank_ns:user','1000320190925','login:username','tank' hbase> put 'tank_ns:user','1000320190925','info:sex','man' hbase> put 'tank_ns:user','1000320190926','login:id','2' hbase> put 'tank_ns:user','1000320190926','login:username','tank1' hbase> put 'tank_ns:user','1000320190926','info:sex','man' hbase> put 'tank_ns:user','1000320190927','login:id','3' hbase> put 'tank_ns:user','1000320190927','login:username','tank2' hbase> put 'tank_ns:user','1000320190927','info:sex','man1'

注意:

通过phoenix删除表,对应的hbase中的表,也会被删除。

phoenix 区分大小写,切默认情况下会将小写转成大写,所以表名、列簇、列名需要用双引号。

phoenix 4.10 版本之后,在创建表映射时需要将 COLUMN_ENCODED_BYTES 置为 0。

转载请注明

作者:海底苍鹰

地址:http://blog.51yip.com/hadoop/2358.html