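The dynamic resource allocation settings differ depending on the mode Spark runs in; this post covers Spark on YARN.

Dynamic resource allocation means acquiring resources when they are needed and returning them when they are not.

The benefits are higher resource utilization and, unlike static mode, no need to work out the number of executors by hand from the CPU count, the total memory, and the memory assigned to each executor.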
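To make the static-mode arithmetic concrete, here is a minimal sketch of the calculation that dynamic allocation lets you skip. The function name and the sample cluster sizes are hypothetical; the overhead rule (the larger of 384 MB and 10% of executor memory) is Spark's default for executor memory overhead on YARN, and the sketch ignores YARN's rounding of container sizes.

```python
# Hypothetical helper: the name and the sample numbers are illustrative only.
def static_executor_count(nodes, cores_per_node, mem_per_node_mb,
                          executor_cores, executor_mem_mb):
    """Estimate how many executors fit on the cluster under static allocation."""
    # Each YARN container needs the executor heap plus memory overhead.
    # Spark's default overhead is max(384 MB, 10% of executor memory).
    overhead_mb = max(384, int(executor_mem_mb * 0.10))
    container_mb = executor_mem_mb + overhead_mb

    # A node is limited by whichever resource runs out first.
    by_cores = cores_per_node // executor_cores
    by_memory = mem_per_node_mb // container_mb
    return nodes * min(by_cores, by_memory)


if __name__ == "__main__":
    # Assumed cluster: 3 nodes with 8 cores and 16 GB each,
    # executors with 2 cores and a 2 GB heap.
    print(static_executor_count(3, 8, 16384, 2, 2048))
```

With dynamic allocation this number no longer has to be fixed up front; only a lower and an upper bound are configured.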

I. Official configuration notes

Configuring the External Shuffle Service

To start the Spark Shuffle Service on each NodeManager in your YARN cluster, follow these instructions:

1. Build Spark with the YARN profile. Skip this step if you are using a pre-packaged distribution.

2. Locate the spark-<version>-yarn-shuffle.jar. This should be under $SPARK_HOME/common/network-yarn/target/scala-<version> if you are building Spark yourself, and under yarn if you are using a distribution.

3. Add this jar to the classpath of all NodeManagers in your cluster.

4. In the yarn-site.xml on each node, add spark_shuffle to yarn.nodemanager.aux-services, then set yarn.nodemanager.aux-services.spark_shuffle.class to org.apache.spark.network.yarn.YarnShuffleService.

5. Increase NodeManager's heap size by setting YARN_HEAPSIZE (1000 by default) in etc/hadoop/yarn-env.sh to avoid garbage collection issues during shuffle.

6. Restart all NodeManagers in your cluster.

The steps above cover the key points, but the details differ between Spark versions.

II. Configure yarn-site.xml

# vim ${HADOOP_HOME}/etc/hadoop/yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle,spark_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.spark_shuffle.class</name>

<value>org.apache.spark.network.yarn.YarnShuffleService</value>

</property>

<property>

<name>spark.shuffle.service.port</name>

<value>7337</value>

</property>

Sync this configuration to every Hadoop node.
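Because a missing or misspelled property only shows up later as a failing job, it can help to check the file before restarting anything. The following is a minimal sketch, not part of Spark or Hadoop: the function names are hypothetical, and the sample XML simply wraps the properties from this post in a `<configuration>` root, which is the usual layout of yarn-site.xml.

```python
import xml.etree.ElementTree as ET

REQUIRED_CLASS = "org.apache.spark.network.yarn.YarnShuffleService"


def read_properties(xml_text):
    """Collect <name>/<value> pairs from a Hadoop-style configuration document."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def check_shuffle_service(props):
    """Return a list of problems; an empty list means the two settings look right."""
    problems = []
    services = [s.strip() for s in
                props.get("yarn.nodemanager.aux-services", "").split(",")]
    if "spark_shuffle" not in services:
        problems.append("spark_shuffle missing from yarn.nodemanager.aux-services")
    if props.get("yarn.nodemanager.aux-services.spark_shuffle.class") != REQUIRED_CLASS:
        problems.append("spark_shuffle.class is not " + REQUIRED_CLASS)
    return problems


# Sample input mirroring the properties shown in this post.
SAMPLE = """
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle,spark_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.spark_shuffle.class</name>
    <value>org.apache.spark.network.yarn.YarnShuffleService</value>
  </property>
  <property>
    <name>spark.shuffle.service.port</name>
    <value>7337</value>
  </property>
</configuration>
"""

if __name__ == "__main__":
    print(check_shuffle_service(read_properties(SAMPLE)))
```

To check a real node, read the contents of `${HADOOP_HOME}/etc/hadoop/yarn-site.xml` and pass them to `read_properties` instead of `SAMPLE`.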

III. Configure Spark

1. Configure spark-defaults.conf

# vim $SPARK_HOME/conf/spark-defaults.conf
spark.master yarn
spark.eventLog.enabled true
spark.eventLog.dir hdfs://bigdata1/spark/logs
spark.driver.memory 2g
spark.executor.memory 2g
spark.shuffle.service.enabled true
spark.shuffle.service.port 7337
spark.dynamicAllocation.enabled true
spark.dynamicAllocation.minExecutors 1
spark.dynamicAllocation.maxExecutors 6
spark.dynamicAllocation.schedulerBacklogTimeout 1s
spark.dynamicAllocation.sustainedSchedulerBacklogTimeout 5s
spark.submit.deployMode client
spark.yarn.jars hdfs://bigdata1/spark/jars/*
spark.serializer org.apache.spark.serializer.KryoSerializer

Sync this configuration to every Spark node.
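The settings that matter for dynamic allocation can be checked with a few lines of Python. This is a sketch under stated assumptions: the helper names are hypothetical, only whitespace-separated `key value` lines are parsed, and the rule that the external shuffle service must be enabled reflects the Spark 2.4 setup used in this post.

```python
def parse_spark_defaults(text):
    """Parse whitespace-separated 'key value' lines, skipping blanks and comments."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            conf[parts[0]] = parts[1].strip()
    return conf


def check_dynamic_allocation(conf):
    """Return a list of problems with the dynamic allocation settings."""
    problems = []
    if conf.get("spark.dynamicAllocation.enabled", "false").lower() != "true":
        problems.append("spark.dynamicAllocation.enabled is not true")
    if conf.get("spark.shuffle.service.enabled", "false").lower() != "true":
        problems.append("spark.shuffle.service.enabled is not true")
    min_executors = int(conf.get("spark.dynamicAllocation.minExecutors", "0"))
    max_executors = conf.get("spark.dynamicAllocation.maxExecutors")
    if max_executors is not None and min_executors > int(max_executors):
        problems.append("minExecutors is greater than maxExecutors")
    return problems


# A subset of the settings from this post.
SAMPLE_CONF = """
spark.master yarn
spark.shuffle.service.enabled true
spark.shuffle.service.port 7337
spark.dynamicAllocation.enabled true
spark.dynamicAllocation.minExecutors 1
spark.dynamicAllocation.maxExecutors 6
"""

if __name__ == "__main__":
    print(check_dynamic_allocation(parse_spark_defaults(SAMPLE_CONF)))
```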

Key parameters:

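| Parameter | Default | Description |
|---|---|---|
| spark.dynamicAllocation.executorIdleTimeout | 60s | An executor that has been idle for longer than this is removed. |
| spark.dynamicAllocation.cachedExecutorIdleTimeout | infinity | Idle timeout for executors that hold cached data; with the default, such executors are never removed. |
| spark.dynamicAllocation.maxExecutors | infinity | Upper bound on the number of executors; unbounded by default. |
| spark.dynamicAllocation.minExecutors | 0 | Lower bound on the number of executors that are kept. |
| spark.dynamicAllocation.schedulerBacklogTimeout | 1s | Once tasks have been pending for longer than this, new executors are requested. |
| spark.dynamicAllocation.sustainedSchedulerBacklogTimeout | schedulerBacklogTimeout | Interval between subsequent executor requests while the backlog persists. |
| spark.dynamicAllocation.initialExecutors | spark.dynamicAllocation.minExecutors | Initial number of executors to start with when dynamic allocation is enabled. |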
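The two backlog timeouts are easier to follow with numbers. According to the Spark documentation, executors are requested in rounds and the number added grows exponentially (1, 2, 4, 8, ...) while tasks stay pending. The sketch below is a simplified model of that behaviour, not Spark's actual scheduler code: the function name is hypothetical, and it assumes the backlog never clears and ignores the cap Spark applies based on the number of pending tasks.

```python
def ramp_up(current, max_executors, backlog_timeout_s, sustained_timeout_s,
            rounds=10):
    """Return [(time_s, total_executors), ...] for a backlog that never clears."""
    timeline = []
    t = backlog_timeout_s   # first request fires after schedulerBacklogTimeout
    to_add = 1              # first round adds one executor
    total = current
    for _ in range(rounds):
        total = min(max_executors, total + to_add)
        timeline.append((t, total))
        if total == max_executors:
            break
        to_add *= 2               # each later round doubles the request
        t += sustained_timeout_s  # later rounds use the sustained timeout
    return timeline


if __name__ == "__main__":
    # Values from the spark-defaults.conf in this post:
    # minExecutors 1, maxExecutors 6, timeouts of 1s and 5s.
    for time_s, total in ramp_up(1, 6, 1, 5):
        print(time_s, total)
```

Under these assumptions the application goes from 1 executor to the maximum of 6 in three rounds, about 11 seconds after the backlog first appears.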

2. Load the spark-2.4.0-yarn-shuffle.jar package

# ln -s ${SPARK_HOME}/yarn/spark-2.4.0-yarn-shuffle.jar ${HADOOP_HOME}/share/hadoop/yarn/lib

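Either copying the jar or creating a symlink works. This step is important, and note that the location of the shuffle jar differs between Spark versions.

3. Restart the Hadoop cluster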

[root@bigserver2 conf]# jps
8880 DataNode
25505 HRegionServer
8979 JournalNode
24198 QuorumPeerMain
9078 NodeManager //nodemanager
16630 Jps
26473 Kafka
[root@bigserver2 conf]# netstat -tpnl |grep 7337
tcp6 0 0 :::7337 :::* LISTEN 9078/java //only nodes running a NodeManager have this listener
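The same check can be run remotely against every NodeManager host instead of logging in and calling netstat on each one. This is a minimal sketch using only the Python standard library; the function name is hypothetical, and a successful TCP connection only shows that something is listening on the port, not that it is the Spark shuffle service.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable host names.
        return False


# Example usage (host name taken from the shell prompt above):
#   is_port_open("bigserver2", 7337)
```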

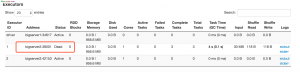

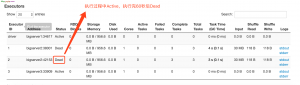

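IV. Test dynamic resource allocation

1. Start spark-sql from the command line and run a SQL statement

For comparison, I checked the production environment: it uses static resource allocation, so executors there are not reclaimed.

2. Run the SQL again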

Please credit the source when reposting

Author: 海底苍鹰

URL: http://blog.51yip.com/hadoop/2213.html