Spark supports three distributed deployment modes: standalone, Spark on Mesos, and Spark on YARN.

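Standalone mode ships with a complete set of services of its own. It can be deployed to a cluster by itself and does not depend on any other resource management system.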

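Spark on Mesos is the officially recommended mode (one reason, of course, being the shared lineage of the two projects). Because Spark was designed with Mesos support from the start, running Spark on Mesos is more flexible and more natural than running it on YARN.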

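Spark on YARN is the most promising deployment mode. Limited by the state of YARN itself, however, it currently supports only coarse-grained mode. The reason is that a YARN Container cannot be resized dynamically: once a Container has started, the resources available to it can no longer change. Support for this is already on the YARN roadmap.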

1. Download Spark

    # wget https://archive.apache.org/dist/spark/spark-2.4.0/spark-2.4.0-bin-hadoop2.7.tgz
    # tar zxvf spark-2.4.0-bin-hadoop2.7.tgz
    # mv spark-2.4.0-bin-hadoop2.7 /bigdata/spark

2. Set environment variables

    # echo "export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.191.b12-1.el7_6.x86_64" >> ~/.bashrc
    # echo 'export PATH=/bigdata/hadoop/bin:$PATH' >> ~/.bashrc
    # echo "export HADOOP_HOME=/bigdata/hadoop" >> ~/.bashrc
    # echo "export SPARK_HOME=/bigdata/spark" >> ~/.bashrc
    # echo "export LD_LIBRARY_PATH=/bigdata/hadoop/lib/native/" >> ~/.bashrc
    # source ~/.bashrc

I have written several Hadoop articles before. If any of these variables were already set while following them, there is no need to set them again.
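Since some of these exports may already be in `~/.bashrc` from an earlier Hadoop setup, one way to avoid duplicate lines is to append each line only when it is missing. The following is a minimal sketch; `append_once` is a hypothetical helper name introduced here, not part of Spark or Hadoop.

```shell
# append_once FILE LINE
# Append LINE to FILE only if FILE does not already contain that exact line.
# (append_once is an illustrative helper, not a standard command.)
append_once() {
  local file="$1" line="$2"
  touch "$file"
  # -q quiet, -x match the whole line, -F treat LINE as a fixed string
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Usage, with the values from this article:
#   append_once ~/.bashrc 'export HADOOP_HOME=/bigdata/hadoop'
#   append_once ~/.bashrc 'export SPARK_HOME=/bigdata/spark'
#   append_once ~/.bashrc 'export PATH=/bigdata/hadoop/bin:$PATH'
```

The single quotes in the usage lines keep `$PATH` unexpanded, so it is resolved each time `~/.bashrc` is sourced rather than at the moment the line is written.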

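3. Create the log directory and upload the Spark jars

    # hdfs dfs -mkdir /spark
    # hdfs dfs -mkdir /spark/logs
    # hdfs dfs -mkdir /spark/jars
    # cd /bigdata/spark/
    # hdfs dfs -put jars/* /spark/jars/

With the jars already on HDFS, spark-submit does not upload them again when you test it, as the log shows: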

2018-12-29 18:17:22 INFO Client:54 - Source and destination file systems are the same. Not copying hdfs://bigserver1:9000/spark/jars/JavaEWAH-0.3.2.jar

2018-12-29 18:17:22 INFO Client:54 - Source and destination file systems are the same. Not copying hdfs://bigserver1:9000/spark/jars/RoaringBitmap-0.5.11.jar

2018-12-29 18:17:22 INFO Client:54 - Source and destination file systems are the same. Not copying hdfs://bigserver1:9000/spark/jars/ST4-4.0.4.jar

4. Configure spark-env.sh

    # cd /bigdata/spark/conf
    # cp spark-env.sh.template spark-env.sh

Add the following lines to spark-env.sh:

    export SPARK_CONF_DIR=/bigdata/spark/conf
    export HADOOP_CONF_DIR=/bigdata/hadoop/etc/hadoop
    export YARN_CONF_DIR=/bigdata/hadoop/etc/hadoop
    export SPARK_HISTORY_OPTS="-Dspark.history.retainedApplications=3 -Dspark.history.fs.logDirectory=hdfs://bigserver1:9000/spark/logs"

5. Configure spark-defaults.conf

    # cp spark-defaults.conf.template spark-defaults.conf

Add the following lines to spark-defaults.conf:

    spark.master                     yarn
    spark.eventLog.enabled           true
    spark.eventLog.dir               hdfs://bigserver1:9000/spark/logs
    spark.driver.cores               1
    spark.driver.memory              512m
    spark.executor.cores             1
    spark.executor.memory            512m
    spark.executor.instances         1
    spark.submit.deployMode          client
    spark.yarn.jars                  hdfs://bigserver1:9000/spark/jars/*
    spark.serializer                 org.apache.spark.serializer.KryoSerializer
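The 512m executor setting is not the amount YARN actually reserves. Spark adds a memory overhead to each executor (by default the larger of 384 MB and 10% of the executor memory), and YARN then rounds the request up. The sketch below works through that arithmetic. It assumes the scheduler rounds up to a multiple of `yarn.scheduler.minimum-allocation-mb` (1024 by default), which is how the Capacity Scheduler behaves; other schedulers or custom settings may round differently. `yarn_container_mb` is a hypothetical helper name.

```shell
# yarn_container_mb EXECUTOR_MB [MIN_ALLOC_MB]
# Estimate the YARN container size (in MB) requested for one executor.
# Assumption: requests are rounded up to a multiple of MIN_ALLOC_MB
# (yarn.scheduler.minimum-allocation-mb, default 1024).
yarn_container_mb() {
  local mem_mb="$1" min_alloc_mb="${2:-1024}"
  # Default overhead: max(384 MB, 10% of executor memory)
  local overhead=$(( mem_mb / 10 ))
  if [ "$overhead" -lt 384 ]; then
    overhead=384
  fi
  local total=$(( mem_mb + overhead ))
  # Round up to the next multiple of the minimum allocation
  echo $(( (total + min_alloc_mb - 1) / min_alloc_mb * min_alloc_mb ))
}

# With spark.executor.memory 512m: 512 + 384 = 896, rounded up to 1024
yarn_container_mb 512
```

Under these assumptions, a 512m executor occupies a 1024 MB container, which is worth keeping in mind when sizing small test clusters.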

6. Configure slaves

    # cp slaves.template slaves

Add the following lines to slaves:

    10.0.0.237 bigserver1
    10.0.0.236 bigserver2
    10.0.0.193 bigserver3

7. Use scp -r to sync the /bigdata/spark directory to all nodes
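The sync step can be scripted as a loop over the other nodes. This is a sketch only: it assumes passwordless SSH between the nodes and the same directory layout on each of them, and `sync_spark` and the `DRY_RUN` variable are hypothetical names introduced for illustration.

```shell
# sync_spark DIR HOST...
# Copy DIR to the same parent path on every HOST with scp -r.
# Set DRY_RUN=1 to print the commands instead of running them.
# Assumes passwordless SSH to each host (not covered in this article).
sync_spark() {
  local dir="$1"
  shift
  local parent host
  parent="$(dirname "$dir")"
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $dir $host:$parent/"
    else
      scp -r "$dir" "$host:$parent/"
    fi
  done
}

# Preview what would be copied from bigserver1 to the other two nodes
DRY_RUN=1 sync_spark /bigdata/spark bigserver2 bigserver3
```

Running it once with `DRY_RUN=1` is a cheap way to confirm the target paths before copying anything.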

8. Test

    # cd /bigdata/spark
    # ./bin/run-example SparkPi 10

If the output contains a line like the following, the test succeeded:

    Pi is roughly 3.1451911451911454
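Because the result line is buried in a lot of log output, it can help to filter for it. The helper below is a small sketch; `extract_pi` is a hypothetical name, and it relies only on the `Pi is roughly` line format shown above.

```shell
# extract_pi
# Read run-example output on stdin and print only the estimated value.
# Exits non-zero when no "Pi is roughly ..." line is present.
extract_pi() {
  sed -n 's/^Pi is roughly \(.*\)$/\1/p' | grep .
}

# Usage on a running cluster:
#   ./bin/run-example SparkPi 10 2>&1 | extract_pi
```

The non-zero exit status makes the helper usable as a pass/fail check in a script.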

If you see the following warning:

2018-12-29 17:28:28 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform...

Solution:

    # echo 'export LD_LIBRARY_PATH=$HADOOP_HOME/lib/native/' >> ~/.bashrc
    # source ~/.bashrc

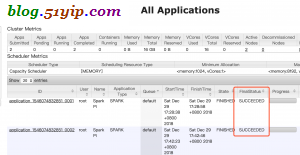

9. Submit a job with spark-submit

    # ./bin/spark-submit \
        --class org.apache.spark.examples.SparkPi \
        --master yarn \
        --deploy-mode cluster \
        examples/jars/spark-examples_2.11-2.4.0.jar \
        500
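In cluster deploy mode the driver runs inside YARN, so the `Pi is roughly` line does not appear in the local terminal; it ends up in the application's container logs. A possible way to retrieve it is to pull the application ID out of the spark-submit output and pass it to `yarn logs`. This sketch assumes YARN log aggregation is enabled on the cluster; `extract_app_id` is a hypothetical helper name.

```shell
# extract_app_id
# Read spark-submit output on stdin and print the first YARN application ID.
extract_app_id() {
  grep -o 'application_[0-9]*_[0-9]*' | head -n 1
}

# Usage on a running cluster (assumes log aggregation is enabled):
#   app_id=$(./bin/spark-submit --class org.apache.spark.examples.SparkPi \
#              --master yarn --deploy-mode cluster \
#              examples/jars/spark-examples_2.11-2.4.0.jar 500 2>&1 | extract_app_id)
#   yarn logs -applicationId "$app_id" | grep 'Pi is roughly'
```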

10. Submit jobs with spark-shell

    # ./bin/spark-shell --master yarn
    Setting default log level to "WARN".
    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    Spark context Web UI available at http://bigserver1:4040
    Spark context available as 'sc' (master = yarn, app id = application_1546074832851_0007).
    Spark session available as 'spark'.
    Welcome to
          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 2.4.0
          /_/

    Using Scala version 2.11.12 (OpenJDK 64-Bit Server VM, Java 1.8.0_191)
    Type in expressions to have them evaluated.
    Type :help for more information.

    scala> :help
    All commands can be abbreviated, e.g., :he instead of :help.
    :edit <id>|<line>        edit history
    :help [command]          print this summary or command-specific help
    :history [num]           show the history (optional num is commands to show)
    :h? <string>             search the history
    :imports [name name ...] show import history, identifying sources of names
    :implicits [-v]          show the implicits in scope
    :javap <path|class>      disassemble a file or class name
    :line <id>|<line>        place line(s) at the end of history
    :load <path>             interpret lines in a file
    :paste [-raw] [path]     enter paste mode or paste a file
    :power                   enable power user mode
    :quit                    exit the interpreter
    :replay [options]        reset the repl and replay all previous commands
    :require <path>          add a jar to the classpath
    :reset [options]         reset the repl to its initial state, forgetting all session entries
    :save <path>             save replayable session to a file
    :sh <command line>       run a shell command (result is implicitly => List[String])
    :settings <options>      update compiler options, if possible; see reset
    :silent                  disable/enable automatic printing of results
    :type [-v] <expr>        display the type of an expression without evaluating it
    :kind [-v] <expr>        display the kind of expression's type
    :warnings                show the suppressed warnings from the most recent line which had any

I never installed Scala separately, yet Scala works in the interactive shell. This is because the prebuilt Spark distribution bundles the Scala library and compiler jars in its jars directory.

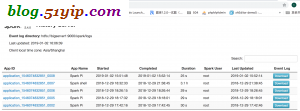

11. View the history of submitted Spark applications

    # cd /bigdata/spark
    # ./sbin/start-history-server.sh

The history server can be started on any node.
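Once the history server is up, it can be checked from the command line through its REST API. The sketch below assumes the server runs on bigserver1 and listens on the default port 18080 (`spark.history.ui.port` was not changed in the configuration above); `history_api_url` is a hypothetical helper name.

```shell
# history_api_url HOST [PORT]
# Build the URL of the history server's application list endpoint.
# PORT defaults to 18080, the default value of spark.history.ui.port.
history_api_url() {
  local host="$1" port="${2:-18080}"
  echo "http://$host:$port/api/v1/applications"
}

# Usage on a running cluster (prints a JSON list of recorded applications):
#   curl -s "$(history_api_url bigserver1)"
```

The same host and port also serve the web UI, so opening `http://bigserver1:18080` in a browser shows the same applications.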

Please credit the source when reposting

Author: 海底苍鹰

URL: http://blog.51yip.com/hadoop/2022.html