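HBase is a distributed, column-oriented, open-source database. It is derived from the Google paper by Fay Chang et al., "Bigtable: A Distributed Storage System for Structured Data". Just as Bigtable builds on the distributed data storage provided by the Google File System (GFS), HBase provides Bigtable-like capabilities on top of Hadoop. HBase started out as a subproject of Apache Hadoop. Unlike a typical relational database, HBase is suited to storing unstructured data. Another difference is that HBase uses a column-based rather than a row-based model.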

I. HBase cluster overview

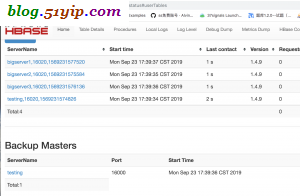

| Host | IP | Processes |
|---|---|---|
| bigserver1 | 10.0.40.237 | Master, RegionServer, ZooKeeper |
| bigserver2 | 10.0.40.222 | RegionServer, ZooKeeper |
| bigserver3 | 10.0.40.193 | RegionServer |
| testing | 10.0.40.175 | Backup Master, RegionServer, ZooKeeper |

II. Installing and configuring the NTP time service

NTP

The clocks on cluster nodes should be synchronized. A small amount of variation is acceptable, but larger amounts of skew can cause erratic and unexpected behavior. Time synchronization is one of the first things to check if you see unexplained problems in your cluster. It is recommended that you run a Network Time Protocol (NTP) service, or another time-synchronization mechanism on your cluster and that all nodes look to the same service for time synchronization. See the Basic NTP Configuration at The Linux Documentation Project (TLDP) to set up NTP.

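Small clock differences between the servers of an HBase cluster are harmless; large differences cause unpredictable problems. In particular, the HMaster refuses to register a RegionServer whose clock differs from its own by more than hbase.master.maxclockskew (30000 ms by default).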
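To make "large" concrete, here is a minimal sketch that flags nodes whose clock offset relative to the active HMaster exceeds a limit. It assumes you have already collected each node's offset in milliseconds (for example from ntpq -p); the sample host offsets are hypothetical, and the 30000 ms default mirrors hbase.master.maxclockskew.

```python
from typing import Dict, List

# Mirrors the default of hbase.master.maxclockskew (milliseconds).
DEFAULT_MAX_SKEW_MS = 30_000


def out_of_sync(offsets_vs_master_ms: Dict[str, float],
                limit_ms: float = DEFAULT_MAX_SKEW_MS) -> List[str]:
    """Return hosts whose clock differs from the master's by more than limit_ms.

    offsets_vs_master_ms maps host -> (host clock - master clock) in ms;
    only the magnitude of the offset is compared, not its sign.
    """
    return sorted(host for host, off in offsets_vs_master_ms.items()
                  if abs(off) > limit_ms)


if __name__ == "__main__":
    # Hypothetical offsets, for illustration only.
    sample = {"bigserver2": 12.5, "bigserver3": -3.1, "testing": 45_000.0}
    print(out_of_sync(sample))
```

A RegionServer rejected this way typically logs a ClockOutOfSyncException and fails to start, which is why fixing NTP first saves debugging time later.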

1. Install ntp

# yum install ntp

2. Server configuration (10.0.40.237); the other nodes are clients that synchronize their time from this server

# cat /etc/ntp.conf |awk '{if($0 !~ /^$/ && $0 !~ /^#/) {print $0}}'

driftfile /var/lib/ntp/drift

restrict default nomodify notrap nopeer noquery

restrict 127.0.0.1

restrict ::1

restrict 10.0.40.0 mask 255.255.255.0 nomodify notrap

server 210.72.145.44 prefer # National Time Service Center, China

server 202.112.10.36 # 1.cn.pool.ntp.org

server 59.124.196.83 # 0.asia.pool.ntp.org

restrict 210.72.145.44 nomodify notrap noquery

restrict 202.112.10.36 nomodify notrap noquery

restrict 59.124.196.83 nomodify notrap noquery

server 127.127.1.0 # local clock

fudge 127.127.1.0 stratum 10

includefile /etc/ntp/crypto/pw

keys /etc/ntp/keys

disable monitor

3. Client configuration

# cat /etc/ntp.conf |awk '{if($0 !~ /^$/ && $0 !~ /^#/) {print $0}}'

driftfile /var/lib/ntp/drift

restrict default nomodify notrap nopeer noquery

restrict 127.0.0.1

restrict ::1

server 10.0.40.237

restrict 10.0.40.237 nomodify notrap noquery

server 127.127.1.0 # local clock

fudge 127.127.1.0 stratum 10

includefile /etc/ntp/crypto/pw

keys /etc/ntp/keys

disable monitor

4. Start ntpd and enable it at boot

# systemctl start ntpd
# systemctl enable ntpd
Created symlink from /etc/systemd/system/multi-user.target.wants/ntpd.service to /usr/lib/systemd/system/ntpd.service.

5. Check the status

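# ntpq -p
     remote           refid      st t when poll reach   delay   offset  jitter
==============================================================================
 bigserver1      202.112.10.36   13 u   33   64    0    0.000    0.000   0.000
 localhost       .INIT.          16 l    -   64    0    0.000    0.000   0.000
# ntpstat
unsynchronised
  time server re-starting
   polling server every 8 s

This output was captured right after ntpd started: reach is still 0 and no peer is marked with *, so ntpstat reports unsynchronised. After several polling intervals (usually a few minutes) the selected server is marked with * and ntpstat reports that the clock is synchronised.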
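Rather than reading the table by eye, a small parser can decide whether a node is synchronised: ntpd marks its chosen source with * in the first column, and reach is an octal register (377 means the last eight polls all succeeded). A minimal sketch, assuming the standard 10-column ntpq -p layout; in practice you would feed it the text captured from subprocess.run(["ntpq", "-p"], capture_output=True, text=True).stdout.

```python
from typing import List, NamedTuple


class Peer(NamedTuple):
    tally: str       # '*' = current sync source, '+' = candidate, ' ' = not selected
    remote: str
    refid: str
    stratum: int
    reach: int       # reachability register, printed by ntpq in octal
    offset_ms: float


def parse_ntpq(output: str) -> List[Peer]:
    """Parse the peer table printed by `ntpq -p`."""
    peers = []
    for line in output.splitlines():
        if not line.strip() or line.lstrip().startswith(("remote", "=")):
            continue  # skip blank lines, the header and the ==== separator
        tally, fields = line[0], line[1:].split()
        if len(fields) != 10:
            continue  # not a complete peer row (e.g. a wrapped long hostname)
        remote, refid, st, _t, _when, _poll, reach, _delay, offset, _jitter = fields
        peers.append(Peer(tally, remote, refid, int(st), int(reach, 8), float(offset)))
    return peers


def is_synced(peers: List[Peer]) -> bool:
    """True once ntpd has selected a sync source (marked '*')."""
    return any(p.tally == "*" for p in peers)
```

Parsing reach as octal matters: "377" is 255 (all eight bits set), not three hundred seventy-seven.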

III. Raising ulimit

Limits on Number of Files and Processes (ulimit)

It is recommended to raise the ulimit to at least 10,000, but more likely 10,240, because the value is usually expressed in multiples of 1024. Each ColumnFamily has at least one StoreFile, and possibly more than six StoreFiles if the region is under load. The number of open files required depends upon the number of ColumnFamilies and the number of regions. The following is a rough formula for calculating the potential number of open files on a RegionServer: (StoreFiles per ColumnFamily) x (regions per RegionServer).
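The HBase reference guide illustrates this with 3 ColumnFamilies per region, an average of 3 StoreFiles per ColumnFamily and 100 regions per RegionServer: 3 * 3 * 100 = 900 file descriptors, not counting JAR files, configuration files and sockets. The sketch below does the same arithmetic and then rounds up to a multiple of 1024 with a 10,240 floor; that rounding rule is this sketch's own reading of the recommendation quoted above, not an HBase setting.

```python
import math

# Floor recommended by the HBase reference guide (a multiple of 1024).
MIN_NOFILE = 10_240


def estimate_open_files(column_families: int, storefiles_per_cf: int,
                        regions_per_rs: int) -> int:
    """Rough StoreFile descriptor count for one RegionServer."""
    return column_families * storefiles_per_cf * regions_per_rs


def recommended_nofile(estimate: int, floor: int = MIN_NOFILE) -> int:
    """Round up to a multiple of 1024, never going below the floor."""
    return max(floor, math.ceil(estimate / 1024) * 1024)


if __name__ == "__main__":
    est = estimate_open_files(3, 3, 100)
    print(est, recommended_nofile(est))
```

For small schemas the floor dominates; the 65535 used in limits.conf below leaves ample headroom over such estimates.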

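# vim /etc/security/limits.conf
* soft nofile 65535
* hard nofile 65535
* soft nproc 65535
* hard nproc 65535

Notes: * applies the limits to all users; nproc is the maximum number of processes; nofile is the maximum number of open files. On CentOS 7, /etc/security/limits.d/20-nproc.conf sets a soft nproc limit of 4096 for non-root users and is read after limits.conf, so adjust it there as well.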

Log in again (or open a new SSH session) for the new limits to take effect; from then on they apply permanently.
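After logging in again you can confirm that the new limits took effect. A minimal sketch using Python's standard resource module, which reports the soft and hard limits of the current process and therefore of the login session it was started from, so run it as the user that starts HBase. The 65535 target simply mirrors the limits.conf entries above.

```python
import resource

TARGET = 65535  # matches the limits.conf entries above


def limit_ok(soft: int, hard: int, minimum: int = TARGET) -> bool:
    """True if both limits are unlimited or at least `minimum`."""
    return all(v == resource.RLIM_INFINITY or v >= minimum for v in (soft, hard))


def report() -> dict:
    """Map limit name -> (soft, hard, ok) for the current process."""
    out = {}
    for name, rlim in (("nofile", resource.RLIMIT_NOFILE),
                       ("nproc", resource.RLIMIT_NPROC)):
        soft, hard = resource.getrlimit(rlim)
        out[name] = (soft, hard, limit_ok(soft, hard))
    return out


if __name__ == "__main__":
    for name, (soft, hard, ok) in report().items():
        print(f"{name}: soft={soft} hard={hard} {'ok' if ok else 'TOO LOW'}")
```

The explicit RLIM_INFINITY check is needed because an unlimited value is reported as a sentinel that would otherwise compare as too low.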

IV. Installing and configuring HBase

1. Download HBase

# wget http://mirrors.hust.edu.cn/apache/hbase/stable/hbase-1.4.9-bin.tar.gz
# tar zxvf hbase-1.4.9-bin.tar.gz
# cp -r hbase-1.4.9 /bigdata/hbase

2. Environment variables

# vim ~/.bashrc
export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.191.b12-1.el7_6.x86_64
export HADOOP_HOME=/bigdata/hadoop
export SPARK_HOME=/bigdata/spark
export HIVE_HOME=/bigdata/hive
export ZOOKEEPER_HOME=/bigdata/zookeeper
export HBASE_HOME=/bigdata/hbase
export PATH=$ZOOKEEPER_HOME/bin:$HBASE_HOME/bin:$SPARK_HOME/bin:$HIVE_HOME/bin:/bigdata/hadoop/bin:$SQOOP_HOME/bin:$PATH
export CLASSPATH=$JAVA_HOME/lib:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export LD_LIBRARY_PATH=/bigdata/hadoop/lib/native/
# source ~/.bashrc

Note: the HBase-related environment variables are identical on all nodes.

3. Link hdfs-site.xml into hbase/conf

# ln -s $HADOOP_HOME/etc/hadoop/hdfs-site.xml $HBASE_HOME/conf/hdfs-site.xml

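Note: linking (or copying) $HADOOP_HOME/etc/hadoop/hdfs-site.xml into $HBASE_HOME/conf keeps the HDFS client settings used by HBase consistent with HDFS. This matters here because hbase.rootdir points at the HA nameservice bigdata1, which HBase can only resolve from the nameservice definitions in hdfs-site.xml. A symlink stays in sync automatically; a copy has to be refreshed whenever hdfs-site.xml changes.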

4. Configure hbase-site.xml

# $HBASE_HOME/conf/hbase-site.xml

<configuration>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>bigserver1,bigserver2,testing</value>

<description>Comma-separated list of servers in the ZooKeeper ensemble.

</description>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/bigdata/zookeeper/data</value>

<description>

Property from ZooKeeper config zoo.cfg. Must match dataDir in zoo.cfg (HBase only uses it when it manages ZooKeeper itself, i.e. HBASE_MANAGES_ZK=true).

The directory where the snapshot is stored.

</description>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://bigdata1/hbase</value>

<description>The directory shared by RegionServers.

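The official documentation stresses repeatedly: do not create this directory in advance, let HBase create it. Otherwise HBase decides a migration is needed, which leads to errors.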

</description>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

<description>Set to true for a distributed cluster; for a single-node setup, set it to false.

The mode the cluster will be in. Possible values are

false: standalone and pseudo-distributed setups with managed ZooKeeper

true: fully-distributed with unmanaged ZooKeeper Quorum (see hbase-env.sh)

</description>

</property>

</configuration>
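Before distributing the file, it can help to sanity-check it together with the linked hdfs-site.xml. A minimal sketch using the standard-library xml.etree.ElementTree: it reads the property entries of both files and checks that distributed mode is on, the ZooKeeper quorum is non-empty, and the nameservice named in hbase.rootdir (bigdata1 here) is declared in dfs.nameservices. The nameservice check assumes an HA HDFS setup like this cluster's; with a single NameNode, hbase.rootdir would name the NameNode host and port instead.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List
from urllib.parse import urlparse


def _collect(root: ET.Element) -> Dict[str, str]:
    """Collect <name>/<value> pairs from a Hadoop-style <configuration> element."""
    return {(p.findtext("name") or "").strip(): (p.findtext("value") or "").strip()
            for p in root.iter("property")}


def props_from_xml(xml_text: str) -> Dict[str, str]:
    return _collect(ET.fromstring(xml_text))


def props_from_file(path: str) -> Dict[str, str]:
    return _collect(ET.parse(path).getroot())


def check_hbase_site(hbase: Dict[str, str], hdfs: Dict[str, str]) -> List[str]:
    """Return human-readable problems (an empty list if none were found)."""
    problems = []
    if hbase.get("hbase.cluster.distributed") != "true":
        problems.append("hbase.cluster.distributed should be true")
    if not hbase.get("hbase.zookeeper.quorum"):
        problems.append("hbase.zookeeper.quorum is empty")
    rootdir = urlparse(hbase.get("hbase.rootdir", ""))
    if rootdir.scheme != "hdfs":
        problems.append("hbase.rootdir should be an hdfs:// URI")
    else:
        declared = {s.strip() for s in hdfs.get("dfs.nameservices", "").split(",")
                    if s.strip()}
        if rootdir.hostname not in declared:
            problems.append(f"nameservice {rootdir.hostname!r} not in dfs.nameservices")
    return problems
```

Usage: check_hbase_site(props_from_file("$HBASE_HOME/conf/hbase-site.xml" expanded to a real path), props_from_file(the matching hdfs-site.xml path)) should return an empty list before you copy the configuration to the other nodes.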

5. Configure the regionservers file

# vim $HBASE_HOME/conf/regionservers
bigserver1
bigserver2
bigserver3
testing

6. Configure the backup-masters file (standby master nodes)

# vim $HBASE_HOME/conf/backup-masters
testing

Note: HBase can run multiple master nodes, so the master is not a single point of failure, but only one master is active at a time; the others are backup masters. List the hostnames of the backup masters in $HBASE_HOME/conf/backup-masters.

7. Configure hbase-env.sh

# vim $HBASE_HOME/conf/hbase-env.sh
# Use the external ZooKeeper instead of the one bundled with HBase
export HBASE_MANAGES_ZK=false
# Configure PermSize. Only needed in JDK7. You can safely remove it for JDK8+
# (with JDK 1.8, keep the following two lines commented out)
#export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m -XX:ReservedCodeCacheSize=256m"
#export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m -XX:ReservedCodeCacheSize=256m"

8. Use scp to sync the configuration files to every machine in the HBase cluster
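One way to script this step is to derive the target hosts from the regionservers and backup-masters files written above and run one scp per host. A minimal sketch; the helper names are hypothetical, and it assumes passwordless SSH between the nodes and the same $HBASE_HOME path everywhere.

```python
import os
import subprocess
from typing import Iterable, List


def parse_hosts(text: str) -> List[str]:
    """One host per line, as in conf/regionservers; blanks and '#' comments are skipped."""
    hosts: List[str] = []
    for line in text.splitlines():
        name = line.split("#", 1)[0].strip()
        if name and name not in hosts:
            hosts.append(name)
    return hosts


def scp_commands(hosts: Iterable[str], conf_dir: str,
                 skip: Iterable[str] = ()) -> List[List[str]]:
    """Build `scp -r <conf_dir> <host>:<parent>/` for every host not in skip."""
    conf_dir = conf_dir.rstrip("/")
    parent = os.path.dirname(conf_dir)
    skipped = set(skip)
    return [["scp", "-r", conf_dir, f"{h}:{parent}/"] for h in hosts if h not in skipped]


def sync_conf(conf_dir: str, this_host: str) -> None:
    """Copy conf_dir to every host listed in regionservers and backup-masters."""
    hosts: List[str] = []
    for name in ("regionservers", "backup-masters"):
        path = os.path.join(conf_dir, name)
        if os.path.exists(path):
            with open(path) as f:
                hosts += [h for h in parse_hosts(f.read()) if h not in hosts]
    for cmd in scp_commands(hosts, conf_dir, skip=[this_host]):
        subprocess.run(cmd, check=True)
```

Run it on bigserver1, e.g. sync_conf(os.path.join(os.environ["HBASE_HOME"], "conf"), socket.gethostname()). Note that scp -r follows symbolic links, so it copies the contents of hdfs-site.xml rather than the link itself; rsync -a preserves symlinks if you would rather keep the link.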

9. Start HBase (run this on the master node, bigserver1)

# start-hbase.sh

V. Testing HBase

1. Check the processes running on each node (jps)

bigserver1:
30753 HMaster
22820 NameNode
27380 QuorumPeerMain
30884 HRegionServer
31062 Jps
6567 ResourceManager
1946 JournalNode
23194 DFSZKFailoverController

bigserver2:
1235 Kafka
17172 HRegionServer
17352 Jps
4314 NodeManager
4155 DataNode
4237 JournalNode
1119 QuorumPeerMain

bigserver3:
10579 HRegionServer
10087 NodeManager
10778 Jps
19788 DataNode

testing:
1521 DFSZKFailoverController
1124 NameNode
9508 HMaster
9876 Jps
32293 QuorumPeerMain
9381 HRegionServer
32347 Kafka
1612 ResourceManager
1375 JournalNode
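With four nodes running many JVMs, a missing process is easy to overlook. The sketch below compares jps output with the roles each host should run. The EXPECTED mapping is an assumption derived from the regionservers file, the backup-masters file and the ZooKeeper quorum configured above; collecting the jps text from each host (for example with ssh host jps) is left to you.

```python
from typing import Dict, Set

# Assumed role map, derived from conf/regionservers, conf/backup-masters
# and hbase.zookeeper.quorum in this article.
EXPECTED: Dict[str, Set[str]] = {
    "bigserver1": {"HMaster", "HRegionServer", "QuorumPeerMain"},
    "bigserver2": {"HRegionServer", "QuorumPeerMain"},
    "bigserver3": {"HRegionServer"},
    "testing": {"HMaster", "HRegionServer", "QuorumPeerMain"},
}


def parse_jps(output: str) -> Set[str]:
    """Return the main-class names from `jps` output ("<pid> <name>" per line)."""
    names = set()
    for line in output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_roles(jps_by_host: Dict[str, str],
                  expected: Dict[str, Set[str]] = EXPECTED) -> Dict[str, Set[str]]:
    """Map host -> expected processes that are not running (healthy hosts omitted)."""
    result = {}
    for host, wanted in expected.items():
        missing = wanted - parse_jps(jps_by_host.get(host, ""))
        if missing:
            result[host] = missing
    return result
```

An empty result means every host runs the HBase and ZooKeeper processes it should; the Hadoop, Kafka and other processes in the listing are simply ignored.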

2. Create a table
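A quick way to exercise this step is a short HBase shell session that creates a table, writes one cell, reads it back and optionally removes the table again, using the shell's create, put, scan, get, disable and drop commands. A minimal sketch: the table name test, the column family cf, and the row and value are hypothetical, and the commands are piped into hbase shell on stdin, which assumes $HBASE_HOME/bin is on PATH.

```python
import subprocess
from typing import List


def smoke_test_commands(table: str = "test", cf: str = "cf",
                        cleanup: bool = True) -> List[str]:
    """HBase shell commands that create a table, write one cell and read it back."""
    cmds = [
        f"create '{table}', '{cf}'",
        f"put '{table}', 'row1', '{cf}:a', 'value1'",
        f"scan '{table}'",
        f"get '{table}', 'row1'",
    ]
    if cleanup:
        # A table must be disabled before it can be dropped.
        cmds += [f"disable '{table}'", f"drop '{table}'"]
    return cmds + ["exit"]


def run_in_hbase_shell(cmds: List[str]) -> subprocess.CompletedProcess:
    """Feed the commands to `hbase shell` on stdin and capture its output."""
    return subprocess.run(["hbase", "shell"], input="\n".join(cmds) + "\n",
                          text=True, capture_output=True, check=False)
```

Usage: print(run_in_hbase_shell(smoke_test_commands()).stdout) on any cluster node; pass cleanup=False to keep the table for further experiments.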

3. Check the web UI (the Master web UI listens on port 16010 by default, e.g. http://bigserver1:16010)
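To check all status pages at once, the sketch below builds the Master and RegionServer UI URLs and probes them with the standard urllib.request. It assumes the HBase 1.x default info ports (hbase.master.info.port 16010, hbase.regionserver.info.port 16030) and the /master-status and /rs-status pages; adjust the constants if your hbase-site.xml overrides the ports.

```python
import urllib.request
from typing import Dict, Iterable

MASTER_INFO_PORT = 16010        # default hbase.master.info.port in HBase 1.x
REGIONSERVER_INFO_PORT = 16030  # default hbase.regionserver.info.port in HBase 1.x


def ui_urls(masters: Iterable[str], regionservers: Iterable[str]) -> Dict[str, str]:
    """Map a label such as 'master@bigserver1' to that daemon's status page URL."""
    urls = {}
    for h in masters:
        urls[f"master@{h}"] = f"http://{h}:{MASTER_INFO_PORT}/master-status"
    for h in regionservers:
        urls[f"regionserver@{h}"] = f"http://{h}:{REGIONSERVER_INFO_PORT}/rs-status"
    return urls


def reachable(url: str, timeout: float = 5.0) -> bool:
    """True if the page answers with HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError and socket timeouts all derive from OSError
        return False
```

Usage: for label, url in ui_urls(["bigserver1", "testing"], ["bigserver1", "bigserver2", "bigserver3", "testing"]).items(): print(label, url, reachable(url)). The backup master on testing also serves a page on 16010, which identifies it as a backup and points to the active master.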

Please credit the source when reposting.

Author: 海底苍鹰

Source: http://blog.51yip.com/hadoop/2182.html