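ELK is short for Elasticsearch, Logstash and Kibana, which together form the server side of a log collection system. Before reading this post, you should be familiar with the following two articles: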

Ubuntu: installing and configuring Elasticsearch, Logstash, Kibana and Filebeat

I. Choosing an ELK setup

Option 1: install Elasticsearch, Logstash and Kibana separately on k8s. The configuration is somewhat more complex, but it is more flexible.

Option 2: install a prebuilt image that bundles the whole ELK stack. This is the approach taken in this post.

[root@bigserver3 elk]# docker search elk
NAME                        DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
sebp/elk                    Collect, search and visualise log data with …   1004               [OK]
qnib/elk                    Dockerfile providing ELK services (Elasticse…   108                [OK]
willdurand/elk              Creating an ELK stack could not be easier.      103                [OK]
sebp/elkx                   Collect, search and visualise log data with …   40                 [OK]
elkarbackup/elkarbackup     ElkarBackup is a free open-source backup sol…   14                 [OK]
elkozmon/zoonavigator-web   This repository is DEPRECATED, use elkozmon/…   13
grubykarol/elk-docker       elk docker image (derived from spujadas/elk-…   7                  [OK]
...............................................(omitted)................................................

I picked the first one, since it has the most stars.

II. Writing the ELK manifests

1. Create the namespace manifest elk-namespace.yaml

[root@bigserver3 elk]# cat elk-namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: elk
  labels:
    name: elk

[root@bigserver3 elk]# kubectl apply -f elk-namespace.yaml    # apply the manifest
[root@bigserver3 elk]# kubectl get ns    # list namespaces
NAME                   STATUS   AGE
default                Active   11d
elk                    Active   7h5m    # created successfully
kube-node-lease        Active   11d
kube-public            Active   11d
kube-system            Active   11d
kubernetes-dashboard   Active   10d

2. Configure Logstash inside the ELK container (ConfigMap)

[root@bigserver3 elk]# cat elk-logstash-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-config
  namespace: elk
data:
  02-beats-input.conf: |
    input {
      beats {
        port => 5044
      }
    }
  30-output.conf: |
    output {
      elasticsearch {
        hosts => ["127.0.0.1:9200"]
        manage_template => false
        index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
      }
    }
  10-syslog.conf: |
    filter {
      if [type] == "syslog" {
        grok {
          match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }
          add_field => [ "received_at", "%{@timestamp}" ]
          add_field => [ "received_from", "%{host}" ]
        }
        syslog_pri { }
        date {
          match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
        }
      }
    }
  11-nginx.conf: |
    filter {
      if [type] == "nginx-access" {
        grok {
          match => { "message" => "%{NGINXACCESS}" }
        }
      }
    }

[root@bigserver3 elk]# kubectl create -f elk-logstash-configmap.yaml    # create
configmap/logstash-config created
[root@bigserver3 elk]# kubectl get configmap -n elk    # check
NAME              DATA   AGE
logstash-config   4      32m

Strictly speaking, the input and output files are all you need. The two filter files are optional.
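Here is a rough Python sketch of what 10-syslog.conf and 30-output.conf do to one syslog line. It approximates the pipeline above and is not Logstash's own code. The regular expression is hand-written to mimic the grok pattern (SYSLOGTIMESTAMP, SYSLOGHOST, DATA, POSINT, GREEDYDATA). The sample log line and the beat name filebeat are hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional

# Hand-written approximation of the grok pattern in 10-syslog.conf:
# %{SYSLOGTIMESTAMP} %{SYSLOGHOST} %{DATA}(?:\[%{POSINT}\])?: %{GREEDYDATA}
SYSLOG_RE = re.compile(
    r"(?P<syslog_timestamp>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<syslog_hostname>\S+) "
    r"(?P<syslog_program>.*?)(?:\[(?P<syslog_pid>[1-9]\d*)\])?: "
    r"(?P<syslog_message>.*)"
)


def parse_syslog(message: str) -> Optional[Dict[str, Optional[str]]]:
    """Return the extracted fields, or None if the line does not match."""
    match = SYSLOG_RE.match(message)
    return match.groupdict() if match else None


def index_name(beat: str, timestamp: datetime) -> str:
    """Mimic index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}".

    The date part is taken from the event timestamp converted to UTC.
    """
    return f"{beat}-{timestamp.astimezone(timezone.utc):%Y.%m.%d}"


if __name__ == "__main__":
    line = "Jul 15 10:01:02 bigserver2 sshd[1234]: Accepted password for root"
    print(parse_syslog(line))
    # 07:00 on July 15 in UTC+8 is still July 14 in UTC
    beijing = timezone(timedelta(hours=8))
    print(index_name("filebeat", datetime(2020, 7, 15, 7, 0, tzinfo=beijing)))
```

The second call illustrates why daily indices can appear to start at 08:00 local time in UTC+8. As far as I know, the %{+YYYY.MM.dd} part is rendered from @timestamp in UTC.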

3. Configure elk-deployment.yaml

[root@bigserver3 elk]# cat elk-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elk
  namespace: elk
  labels:
    app: elk
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elk
  template:
    metadata:
      labels:
        app: elk
    spec:
      nodeSelector:
        nodetype: elk
      containers:
      - name: elk
        image: sebp/elk
        tty: true
        ports:
        - containerPort: 5601
        - containerPort: 5044
        - containerPort: 9200
        volumeMounts:
        - name: data
          mountPath: /var/lib/elasticsearch
        - name: logstash-volume
          mountPath: /etc/logstash/conf.d
      initContainers:
      - name: fix-permissions
        image: busybox
        command: ["sh", "-c", "chmod -R 777 /var/lib/elasticsearch"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: data
          mountPath: /var/lib/elasticsearch
      - name: increase-vm-max-map
        image: busybox
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: increase-fd-ulimit
        image: busybox
        # note: ulimit only changes the limit of this init container's own shell;
        # it does not carry over to the elk container
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
      volumes:
      - name: data
        hostPath:
          path: /var/lib/elasticsearch
      - name: logstash-volume
        configMap:
          name: logstash-config
          items:
          - key: 02-beats-input.conf
            path: 02-beats-input.conf
          - key: 30-output.conf
            path: 30-output.conf
          - key: 10-syslog.conf
            path: 10-syslog.conf
          - key: 11-nginx.conf
            path: 11-nginx.conf

[root@bigserver3 elk]# kubectl create --validate -f elk-deployment.yaml
deployment.apps/elk created    # Deployment created

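Notes:

a) In namespace: elk, elk is the namespace created in step 1.

b) nodeSelector with nodetype: elk. Here nodetype: elk is a node label, which you create like this:

[root@bigserver3 elk]# kubectl label nodes bigserver2 nodetype=elk
node/bigserver2 labeled
[root@bigserver3 elk]# kubectl get nodes --show-labels | grep elk    # check

Taken together, this tells Kubernetes to start the pod only on a node that carries the label nodetype=elk. If you remove this setting, Kubernetes chooses the node itself.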

If the nodeSelector is wrong, you will see the following two symptoms. In the output below, it was set to type=elk instead of nodetype=elk:

[root@bigserver3 elk]# kubectl get pod -n elk
NAME                   READY   STATUS    RESTARTS   AGE
elk-86788c944f-jpwr4   0/1     Pending   0          15m

[root@bigserver3 elk]# kubectl describe pods/elk-86788c944f-jpwr4 -n elk
Name:           elk-86788c944f-jpwr4
Namespace:      elk
Priority:       0
Node:
Labels:         app=elk
                pod-template-hash=86788c944f
Annotations:
Status:         Pending
IP:
IPs:
Controlled By:  ReplicaSet/elk-86788c944f
Containers:
  elk:
    Image:        sebp/elk
    Ports:        5601/TCP, 5044/TCP, 9200/TCP
    Host Ports:   0/TCP, 0/TCP, 0/TCP
    Environment:
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-v7xtf (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  default-token-v7xtf:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-v7xtf
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  type=elk
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason            Age   From               Message
  ----     ------            ----  ----               -------
  Warning  FailedScheduling        default-scheduler  0/3 nodes are available: 3 node(s) didn't match node selector.
  Warning  FailedScheduling        default-scheduler  0/3 nodes are available: 3 node(s) didn't match node selector.

The first symptom is that the pod stays in the Pending state. The second is the "didn't match node selector" event. It means no node matched the selector, so there is nowhere to start the pod.

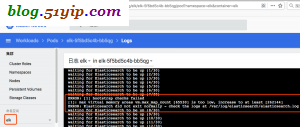
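The rule behind this error can be modelled in a few lines. The Python sketch below is a simplified model of nodeSelector matching only; the real scheduler also checks resources, taints and more. Under this model, a node is eligible if its labels contain every key/value pair of the selector. The node bigserver1 and all the label sets are hypothetical.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(node_labels: Labels, node_selector: Labels) -> bool:
    """Every selector entry must be present on the node with the same value."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(nodes: Dict[str, Labels], node_selector: Labels) -> List[str]:
    """Names of the nodes the pod could be scheduled on under this simplified model."""
    return sorted(name for name, labels in nodes.items() if matches(labels, node_selector))


if __name__ == "__main__":
    # Hypothetical 3-node cluster where only bigserver2 was labeled nodetype=elk
    nodes = {
        "bigserver1": {"kubernetes.io/hostname": "bigserver1"},
        "bigserver2": {"kubernetes.io/hostname": "bigserver2", "nodetype": "elk"},
        "bigserver3": {"kubernetes.io/hostname": "bigserver3"},
    }
    print(eligible_nodes(nodes, {"type": "elk"}))      # [] so the pod stays Pending
    print(eligible_nodes(nodes, {"nodetype": "elk"}))  # ['bigserver2']
    print(eligible_nodes(nodes, {}))                   # no selector: every node qualifies
```

An empty selector matches every node. That corresponds to removing nodeSelector and letting Kubernetes choose.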

c) command: ["sysctl", "-w", "vm.max_map_count=262144"]. Without this init container, Elasticsearch cannot start either, and reports the following error:

ERROR: [1] bootstrap checks failed
[1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
ERROR: Elasticsearch did not exit normally - check the logs at /var/log/elasticsearch/elasticsearch.log
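vm.max_map_count is a node-wide kernel setting rather than a per-container one. That is why a privileged init container can raise it for the whole node. As a sketch, the Python snippet below checks the current value against the 262144 minimum from the error message. It reads /proc/sys/vm/max_map_count, so it is meant to run on a Linux node. The function names are illustrative.

```python
from pathlib import Path
from typing import Optional

REQUIRED_MAX_MAP_COUNT = 262144  # minimum demanded by the Elasticsearch bootstrap check


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> Optional[int]:
    """Return the current value, or None if the file is missing or unreadable."""
    try:
        return int(Path(path).read_text().strip())
    except (OSError, ValueError):
        return None


def passes_bootstrap_check(value: Optional[int]) -> bool:
    """True if the value satisfies the max_map_count bootstrap check."""
    return value is not None and value >= REQUIRED_MAX_MAP_COUNT


if __name__ == "__main__":
    current = read_max_map_count()
    print(current, passes_bootstrap_check(current))
```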

k8s的deployment的配置非常多,上面几个配置是要重点注意的。

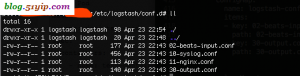

d),将configmap转化成容器内部的配置文件,下面是转化前后对比
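The conversion in d) can be sketched as follows. Each entry in items becomes a file named after its path under the mountPath /etc/logstash/conf.d, and the file holds the value stored under key. The Python snippet below only computes the resulting file paths. It assumes, per the Logstash documentation on path.config, that a config directory is read in alphabetical order. That ordering is why numeric prefixes such as 02, 10, 11 and 30 are a common way to control the order. The helper names are hypothetical.

```python
from pathlib import PurePosixPath
from typing import Dict, List

MOUNT_PATH = "/etc/logstash/conf.d"  # mountPath of logstash-volume in elk-deployment.yaml

# The items list of logstash-volume: ConfigMap key -> file name
ITEMS: List[Dict[str, str]] = [
    {"key": "02-beats-input.conf", "path": "02-beats-input.conf"},
    {"key": "30-output.conf", "path": "30-output.conf"},
    {"key": "10-syslog.conf", "path": "10-syslog.conf"},
    {"key": "11-nginx.conf", "path": "11-nginx.conf"},
]


def projected_files(mount_path: str, items: List[Dict[str, str]]) -> Dict[str, str]:
    """Map each file path inside the container to the ConfigMap key it contains."""
    return {str(PurePosixPath(mount_path) / item["path"]): item["key"] for item in items}


def load_order(files: Dict[str, str]) -> List[str]:
    """Alphabetical order in which a directory-based path.config reads the files."""
    return sorted(files)


if __name__ == "__main__":
    for path in load_order(projected_files(MOUNT_PATH, ITEMS)):
        print(path)
```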

4. Configure elk-elastic.yaml

[root@bigserver3 elk]# cat elk-elastic.yaml
apiVersion: v1
kind: Service
metadata:
  name: elk-elastic
  namespace: elk
spec:
  type: ClusterIP
  ports:
  - port: 9200
    targetPort: 9200
  selector:
    app: elk

[root@bigserver3 elk]# kubectl create -f elk-elastic.yaml
service/elk-elastic created

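A note on type: ClusterIP. The Service types are:

ClusterIP: exposes the service on a cluster-internal IP. With this value, the service is only reachable from inside the cluster. This is the default ServiceType.

NodePort: exposes the service on each node's IP at a static port (the NodePort). The NodePort service routes to a ClusterIP service, which is created automatically. You can reach a NodePort service from outside the cluster by requesting <NodeIP>:<NodePort>.

LoadBalancer: exposes the service externally through a cloud provider's load balancer. The external load balancer can route to the NodePort and ClusterIP services.

ExternalName: maps the service to the contents of the externalName field (for example, foo.bar.example.com) by returning a CNAME record with that value. No proxying of any kind is set up.

Whether to use ClusterIP, NodePort or another type depends on your situation. If the service must be reachable through the nodes' IP addresses, for example from outside the cluster, you need NodePort.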
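With type: ClusterIP, other pods reach the service through cluster DNS. The sketch below assumes cluster DNS is running and the default cluster domain cluster.local is in use. Under those assumptions, the service addresses can be derived as follows. A pod in the same namespace can use the short service name, and a pod in another namespace needs the form SERVICE.NAMESPACE.svc.CLUSTER_DOMAIN. The helper names are hypothetical.

```python
def service_dns(name: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified in-cluster DNS name of a Service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


def in_cluster_address(name: str, namespace: str, port: int, client_namespace: str,
                       cluster_domain: str = "cluster.local") -> str:
    """host:port that a pod in client_namespace can use to reach the Service."""
    if client_namespace == namespace:
        host = name  # the short name resolves within the same namespace
    else:
        host = service_dns(name, namespace, cluster_domain)
    return f"{host}:{port}"


if __name__ == "__main__":
    # The three Services defined in this post, seen from a pod in the default namespace
    for svc, port in {"elk-elastic": 9200, "elk-kibana": 5601, "elk-logstash": 5044}.items():
        print(in_cluster_address(svc, "elk", port, client_namespace="default"))
```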

5. Configure elk-kibana.yaml

[root@bigserver3 elk]# cat elk-kibana.yaml
apiVersion: v1
kind: Service
metadata:
  name: elk-kibana
  namespace: elk
spec:
  type: NodePort
  ports:
  - port: 5601
    nodePort: 30009
  selector:
    app: elk

[root@bigserver3 elk]# kubectl create -f elk-kibana.yaml
service/elk-kibana created
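Two details of this Service are easy to miss. First, targetPort is omitted, so it defaults to the value of port (5601). Second, nodePort must fall inside the cluster's NodePort range, which is 30000 to 32767 unless the API server's --service-node-port-range flag was changed. The Python sketch below checks the port against that default range and builds the Kibana URL. The helper names and the node IP in the example are hypothetical.

```python
import ipaddress

# Default NodePort range (kube-apiserver --service-node-port-range=30000-32767)
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def check_node_port(port: int, allowed: range = DEFAULT_NODE_PORT_RANGE) -> None:
    """Raise ValueError if port lies outside the allowed NodePort range."""
    if port not in allowed:
        raise ValueError(f"nodePort {port} is outside {allowed.start}-{allowed.stop - 1}")


def kibana_url(node_ip: str, node_port: int = 30009) -> str:
    """URL for Kibana exposed through a NodePort on the given node."""
    ip = ipaddress.ip_address(node_ip)  # raises ValueError on a malformed address
    check_node_port(node_port)
    host = f"[{ip}]" if ip.version == 6 else str(ip)  # IPv6 literals need brackets
    return f"http://{host}:{node_port}"


if __name__ == "__main__":
    print(kibana_url("192.168.1.12"))  # hypothetical node IP
```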

6. Configure elk-logstash.yaml

[root@bigserver3 elk]# cat elk-logstash.yaml
apiVersion: v1
kind: Service
metadata:
  name: elk-logstash
  namespace: elk
spec:
  type: ClusterIP
  ports:
  - port: 5044
    targetPort: 5044
  selector:
    app: elk

[root@bigserver3 elk]# kubectl create -f elk-logstash.yaml
service/elk-logstash created

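During installation you may need to delete and recreate Services, pods, Deployments and labels. I won't cover that here, because this post would get too long. I will write a separate post about it.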

III. Checking that ELK installed successfully

1. Is the pod Running?

[root@bigserver3 elk]# kubectl get pod,svc -n elk
NAME                      READY   STATUS    RESTARTS   AGE
pod/elk-ddc4c865b-859ks   1/1     Running   0          3h23m

NAME                   TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
service/elk-elastic    ClusterIP   10.1.40.47     <none>        9200/TCP         3h10m
service/elk-kibana     NodePort    10.1.7.179     <none>        5601:30009/TCP   3h8m
service/elk-logstash   ClusterIP   10.1.166.240   <none>        5044/TCP         3h8m

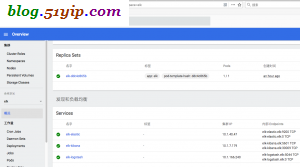
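Instead of reading the READY and STATUS columns by eye, you can script the check. Below is a minimal Python sketch. It assumes its input is the plain-text output of kubectl get pod -n elk, which is a single table with the header NAME READY STATUS RESTARTS AGE. For anything beyond a quick check, kubectl get pod -o json or kubectl wait --for=condition=Ready pod -l app=elk -n elk is sturdier than parsing columns. The function name is hypothetical.

```python
from typing import List, Tuple


def unhealthy_pods(get_pod_output: str) -> List[Tuple[str, str, str]]:
    """Return (name, ready, status) for each pod not Running with all containers ready.

    Expects `kubectl get pod` output for one namespace: a header row, then one row per pod.
    """
    rows = [line.split() for line in get_pod_output.strip().splitlines() if line.strip()]
    if len(rows) < 2:
        raise ValueError("no pod rows found in the output")
    problems = []
    for name, ready, status, *_ in rows[1:]:  # rows[0] is the header
        up, total = ready.split("/")
        if status != "Running" or up != total:
            problems.append((name, ready, status))
    return problems


if __name__ == "__main__":
    sample = """NAME                  READY   STATUS    RESTARTS   AGE
elk-ddc4c865b-859ks   1/1     Running   0          3h23m"""
    print(unhealthy_pods(sample))  # an empty list means every pod is up
```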

2. Check in the k8s Dashboard that the status is healthy

3. Open a shell in the pod and check that the Elasticsearch and Logstash processes are running

[root@bigserver3 elk]# kubectl -n elk exec -it elk-ddc4c865b-859ks -- /bin/bash
root@elk-ddc4c865b-859ks:/# ps
  PID TTY          TIME CMD
  325 pts/1    00:00:00 bash
  339 pts/1    00:00:00 ps
root@elk-ddc4c865b-859ks:/# ps aux | grep elasticsearch
root@elk-ddc4c865b-859ks:/# ps aux | grep logstash

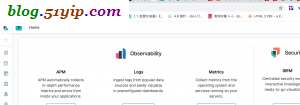
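Besides ps, you can ask Elasticsearch directly. The sketch below queries the _cluster/health endpoint and returns its status field, which is green, yellow or red. The base URL is an assumption. From inside the elk pod, http://127.0.0.1:9200 is the address the Logstash output uses. From other pods, http://elk-elastic.elk.svc.cluster.local:9200 would apply. The function names are hypothetical. On a single-node setup like this one, yellow is common, because replica shards cannot be placed on the same node as their primaries.

```python
import json
import urllib.request
from typing import Optional


def health_status(body: str) -> str:
    """Extract the status field from a _cluster/health JSON response."""
    return json.loads(body)["status"]


def fetch_health(base_url: str, timeout: float = 5.0) -> Optional[str]:
    """Return green/yellow/red, or None if Elasticsearch cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=timeout) as resp:
            return health_status(resp.read().decode("utf-8"))
    except OSError:  # URLError, refused connections and timeouts all derive from OSError
        return None


# Example (needs a reachable Elasticsearch):
# print(fetch_health("http://127.0.0.1:9200"))
```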

4. Check that Kibana is reachable
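From outside the cluster, open http://NODE_IP:30009 in a browser, where NODE_IP is the address of any cluster node. If the page does not load, a plain TCP check helps separate network problems from Kibana problems. Below is a minimal Python sketch; the node IP in the comment is hypothetical.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example, with a hypothetical node IP:
# print(port_open("192.168.1.12", 30009))
```

An open port only shows that the NodePort is forwarding traffic. Kibana itself may still be starting up.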

If none of the above shows a problem, the installation succeeded. A later post will cover installing and using the Filebeat client.

Please credit the source when reposting.

Author: 海底苍鹰

URL: http://blog.51yip.com/cloud/2408.html