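Elasticsearch has built-in Chinese analysis, but it is very naive (a comparison follows below). I recommend analysis-ik (elasticsearch-analysis-ik) instead. In my estimate, 80-90% of people who use ES for full-text search use this Chinese analyzer, and it is actively maintained.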

1. Elasticsearch analyzers

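Elasticsearch has many built-in analyzers, for example `standard`, `english`, and `chinese`.

`standard` naively splits Chinese text into individual characters. That makes it usable for almost any language, but its precision for Chinese is low.

`english` is smarter about English text. It handles singular and plural forms and letter case, and it filters stopwords such as "the".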

2. Install Maven

```shell
$ brew install maven          # macOS
$ sudo apt-get install maven  # Ubuntu
$ sudo yum install maven      # CentOS / Red Hat
$ mvn -v
Apache Maven 3.5.0 (ff8f5e7444045639af65f6095c62210b5713f426; 2017-04-04T03:39:06+08:00)
Maven home: /usr/local/Cellar/maven/3.5.0/libexec
Java version: 1.8.0_112, vendor: Oracle Corporation
Java home: /Library/Java/JavaVirtualMachines/jdk1.8.0_112.jdk/Contents/Home/jre
Default locale: zh_CN, platform encoding: UTF-8
OS name: "mac os x", version: "10.12.6", arch: "x86_64", family: "mac"
```
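The Homebrew formula used in step 4 lists `Required: java = 1.8`, so it is worth checking which JDK Maven itself runs on. Maven reports this under `Java version` in `mvn -v`. Below is a minimal sketch that extracts that value and checks whether it is a 1.8 release. The function names are hypothetical, and the parsing assumes the `Java version: X, vendor: ...` line format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; mvn_java_version reads `mvn -v` output on stdin.

# Print the value from the "Java version: X, vendor: ..." line, e.g. 1.8.0_112.
mvn_java_version() {
  sed -n 's/^Java version: \([^,]*\),.*/\1/p'
}

# Succeed only for a 1.8.x version string (the formula lists java = 1.8).
is_java8() {
  case "$1" in
    1.8.*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: `is_java8 "$(mvn -v | mvn_java_version)" || echo "Maven is not running on Java 1.8" >&2`.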

3. Download the analysis-ik plugin

Clone the repository and check out the branch that matches your Elasticsearch version. At the time of writing, `master` built the plugin for ES 6.2.3.

```shell
$ git clone https://github.com/medcl/elasticsearch-analysis-ik.git
$ cd elasticsearch-analysis-ik
$ git branch -a
* master
  remotes/origin/2.x
  remotes/origin/5.3.x
  remotes/origin/5.x
  remotes/origin/6.1.x
  remotes/origin/HEAD -> origin/master
  remotes/origin/arkxu-master
  remotes/origin/master
  remotes/origin/revert-80-patch-1
  remotes/origin/rm
  remotes/origin/wyhw-ik_lucene4
```

Then build the plugin with Maven. The build writes a zip file to `target/releases/`; unzip it there:

```shell
$ mvn package
$ ll target/releases/
total 4400
drwxr-xr-x   3 zhangying  staff      102  4 24 13:46 ./
drwxr-xr-x  11 zhangying  staff      374  4 24 13:32 ../
-rw-r--r--   1 zhangying  staff  4501993  4 24 13:32 elasticsearch-analysis-ik-6.2.3.zip
$ cd target/releases/ && unzip elasticsearch-analysis-ik-6.2.3.zip
```
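Which branch to check out depends on your Elasticsearch version. The hypothetical helper below encodes the branch list shown above as it stood in April 2018, when `master` built 6.2.3. It is only a sketch. The built plugin must still target your exact ES version, so compare against the project's current README before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the analysis-ik branch for an ES version,
# based on the remote branches listed above (April 2018 snapshot).
ik_branch_for() {
  case "$1" in
    6.2.3) echo "master" ;;   # master built the plugin for 6.2.3 at the time
    6.1.*) echo "6.1.x" ;;
    5.3.*) echo "5.3.x" ;;    # must come before the broader 5.* pattern
    5.*)   echo "5.x" ;;
    2.*)   echo "2.x" ;;
    *)     echo "no known branch for ES $1" >&2; return 1 ;;
  esac
}
```

Usage: `branch=$(ik_branch_for 6.1.2) && git checkout "$branch"`. Git creates a local `6.1.x` branch tracking `origin/6.1.x`.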

4. Install the analysis-ik plugin

`brew info` shows where Homebrew's Elasticsearch keeps its plugins (the `Plugins:` line):

```shell
$ brew info elasticsearch
elasticsearch: stable 6.2.3, HEAD
Distributed search & analytics engine
https://www.elastic.co/products/elasticsearch
/usr/local/Cellar/elasticsearch/6.2.3 (112 files, 30.8MB) *
  Built from source on 2018-04-24 at 14:17:01
From: https://github.com/Homebrew/homebrew-core/blob/master/Formula/elasticsearch.rb
==> Requirements
Required: java = 1.8 ✔
==> Options
--HEAD
	Install HEAD version
==> Caveats
Data:    /usr/local/var/lib/elasticsearch/elasticsearch_zhangying/
Logs:    /usr/local/var/log/elasticsearch/elasticsearch_zhangying.log
Plugins: /usr/local/var/elasticsearch/plugins/
Config:  /usr/local/etc/elasticsearch/

To have launchd start elasticsearch now and restart at login:
  brew services start elasticsearch
Or, if you don't want/need a background service you can just run:
  elasticsearch
```

Move the directory you just unzipped into that plugins directory, renaming it to `ik`:

```shell
$ mv elasticsearch /usr/local/var/elasticsearch/plugins/ik
```
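Elasticsearch refuses to load a plugin whose `plugin-descriptor.properties` names a different `elasticsearch.version` than the running node. Below is a minimal sketch of a pre-flight check to run before the `mv`. The function names are hypothetical, and it assumes the unzipped `elasticsearch` directory from step 3 contains the descriptor at its top level.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking an unpacked plugin directory.

# Print the elasticsearch.version value from the plugin descriptor.
plugin_es_version() {
  local dir="$1"
  sed -n 's/^elasticsearch\.version=//p' "$dir/plugin-descriptor.properties"
}

# Succeed only if the plugin was built for the given ES version.
check_plugin_matches() {
  local dir="$1" es_version="$2" found
  found=$(plugin_es_version "$dir") || return 1
  if [ "$found" != "$es_version" ]; then
    echo "plugin built for ES $found, but ES is $es_version" >&2
    return 1
  fi
}
```

Usage: `check_plugin_matches elasticsearch 6.2.3 && mv elasticsearch /usr/local/var/elasticsearch/plugins/ik`.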

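Note: do not put analyzer settings (an `index:` / `analysis:` / `analyzer:` block) in `elasticsearch.yml`. Older versions accepted this, but on ES 6.x every variation I tried failed with this error: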

```
node settings must not contain any index level settings
```

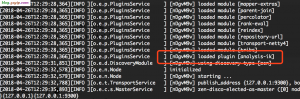
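In 6.x, analyzer settings belong in each index's own settings (for example, sent when the index is created), not in the node's `elasticsearch.yml`. If a node refuses to start with the error above, one quick check is to list the lines in `elasticsearch.yml` that look index-level. The hypothetical function below does that with a rough heuristic: it only flags keys starting with `index.` or an `index:` block.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (with line numbers) lines that start an
# index-level setting; exits 0 if any were found, 1 if none.
find_index_settings() {
  grep -nE '^[[:space:]]*index[.:]' "$1"
}
```

Usage, with the config directory from the `brew info` output: `find_index_settings /usr/local/etc/elasticsearch/elasticsearch.yml`.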

5. Start Elasticsearch

```shell
$ elasticsearch    # start Elasticsearch in the foreground
```

If you see output like the following, the startup succeeded:
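To confirm from a second terminal that the node actually loaded IK, you can query the `_cat/plugins` API. It prints one row per plugin with the node name, component and version. The sketch below assumes two things: the node listens on 127.0.0.1:9200, and IK registers under the component name `analysis-ik` (the name in its plugin descriptor). The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking that the IK plugin is loaded.

# Read `_cat/plugins` rows ("node component version") on stdin and
# exit 0 if any row's component is analysis-ik.
has_ik_plugin() {
  awk '$2 == "analysis-ik" { found = 1 } END { exit !found }'
}

# Ask the local node (needs a running Elasticsearch).
check_ik_loaded() {
  curl -s "http://127.0.0.1:9200/_cat/plugins" | has_ik_plugin
}
```

Usage: `check_ik_loaded && echo "IK is loaded"`.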

6. Test Chinese analysis

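Create the index:

```shell
$ curl -XPUT "http://127.0.0.1:9200/tank?pretty"
```

Create the mapping for the `chinese` type. It does two things:

- It disables the `_all` catch-all field (deprecated in 6.x), so full-text queries are not run against one field that combines every other field.
- It analyzes both text fields with `ik_max_word`, IK's finest-grained segmentation mode.

```shell
$ curl -XPOST "http://127.0.0.1:9200/tank/chinese/_mapping?pretty" -H "Content-Type: application/json" -d '
{
  "chinese": {
    "_all": {
      "enabled": false
    },
    "properties": {
      "id": {
        "type": "integer"
      },
      "username": {
        "type": "text",
        "analyzer": "ik_max_word"
      },
      "description": {
        "type": "text",
        "analyzer": "ik_max_word"
      }
    }
  }
}'
```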

Insert two documents:

```shell
$ curl -XPOST "http://127.0.0.1:9200/tank/chinese/?pretty" -H "Content-Type: application/json" -d '
{
  "id": 1,
  "username": "中国高铁速度很快",
  "description": "如果要修改一个字段的类型"
}'
$ curl -XPOST "http://127.0.0.1:9200/tank/chinese/?pretty" -H "Content-Type: application/json" -d '
{
  "id": 2,
  "username": "动车和复兴号,都属于高铁",
  "description": "现在想要修改为string类型"
}'
```

Search the `username` field:

```shell
$ curl -XPOST "http://127.0.0.1:9200/tank/chinese/_search?pretty" -H "Content-Type: application/json" -d '
{
  "query": {
    "match": {
      "username": "中国高铁"
    }
  }
}'
{
  "took" : 188,
  "timed_out" : false,
  "_shards" : {
    "total" : 5,
    "successful" : 5,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : 2,
    "max_score" : 0.8630463,
    "hits" : [
      {
        "_index" : "tank",
        "_type" : "chinese",
        "_id" : "oJfx_2IBVvjz0l6TkJ6K",
        "_score" : 0.8630463,
        "_source" : {
          "id" : 1,
          "username" : "中国高铁速度很快",
          "description" : "如果要修改一个字段的类型"
        }
      },
      {
        "_index" : "tank",
        "_type" : "chinese",
        "_id" : "oZfx_2IBVvjz0l6Tpp64",
        "_score" : 0.5753642,
        "_source" : {
          "id" : 2,
          "username" : "动车和复兴号,都属于高铁",
          "description" : "现在想要修改为string类型"
        }
      }
    ]
  }
}
```

The higher the `_score`, the better the document matches the query. Document 1 contains both 中国 and 高铁 and ranks above document 2, which contains only 高铁.
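When trying different queries, a small wrapper saves retyping the request. The hypothetical sketch below builds the same `match` query as above with `printf`. It does no JSON escaping, so it assumes the field name and search text contain no double quotes or backslashes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a match query body for one field.
# No JSON escaping: field and text must not contain " or \.
match_body() {
  printf '{"query":{"match":{"%s":"%s"}}}' "$1" "$2"
}

# Run the query against the tank index from step 6 (needs a running node).
search_tank() {
  curl -s -XPOST "http://127.0.0.1:9200/tank/chinese/_search?pretty" \
    -H "Content-Type: application/json" -d "$(match_body "$1" "$2")"
}
```

Usage: `search_tank description 修改类型`.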

7. Comparing Elasticsearch's built-in analyzer with IK

`ik_smart`, IK's coarsest-grained mode, keeps 感叹号 as a single token:

```shell
$ curl -XPOST 'http://localhost:9200/tank/_analyze?pretty=true' -H 'Content-Type: application/json' -d '
{
  "analyzer": "ik_smart",
  "text": "感叹号"
}'
{
  "tokens" : [
    {
      "token" : "感叹号",
      "start_offset" : 0,
      "end_offset" : 3,
      "type" : "CN_WORD",
      "position" : 0
    }
  ]
}
```

`standard`, Elasticsearch's built-in analyzer, splits it into single characters:

```shell
$ curl -XPOST 'http://localhost:9200/tank/_analyze?pretty=true' -H 'Content-Type: application/json' -d '
{
  "analyzer": "standard",
  "text": "感叹号"
}'
{
  "tokens" : [
    {
      "token" : "感",
      "start_offset" : 0,
      "end_offset" : 1,
      "type" : "<IDEOGRAPHIC>",
      "position" : 0
    },
    {
      "token" : "叹",
      "start_offset" : 1,
      "end_offset" : 2,
      "type" : "<IDEOGRAPHIC>",
      "position" : 1
    },
    {
      "token" : "号",
      "start_offset" : 2,
      "end_offset" : 3,
      "type" : "<IDEOGRAPHIC>",
      "position" : 2
    }
  ]
}
```

`ik_max_word`, IK's finest-grained mode, emits every word it finds, including overlapping ones:

```shell
$ curl -XPOST 'http://localhost:9200/tank/_analyze?pretty=true' -H 'Content-Type: application/json' -d '
{
  "analyzer": "ik_max_word",
  "text": "感叹号"
}'
{
  "tokens" : [
    {
      "token" : "感叹号",
      "start_offset" : 0,
      "end_offset" : 3,
      "type" : "CN_WORD",
      "position" : 0
    },
    {
      "token" : "感叹",
      "start_offset" : 0,
      "end_offset" : 2,
      "type" : "CN_WORD",
      "position" : 1
    },
    {
      "token" : "叹号",
      "start_offset" : 1,
      "end_offset" : 3,
      "type" : "CN_WORD",
      "position" : 2
    }
  ]
}
```
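To repeat the comparison on other text, the three requests can be run in a loop that prints only the tokens. The sketch below assumes:

- a local node with IK installed and the `tank` index from step 6;
- text without double quotes or backslashes;
- the `"token" : "..."` line layout that `?pretty=true` produces above.

The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build an _analyze body (no JSON escaping).
analyze_body() {
  printf '{"analyzer":"%s","text":"%s"}' "$1" "$2"
}

# Extract the "token" values from pretty-printed _analyze output on stdin.
tokens_only() {
  sed -n 's/^ *"token" : "\(.*\)",$/\1/p'
}

# Print one line of space-separated tokens per analyzer (needs a running node).
compare_analyzers() {
  local text="$1" analyzer
  for analyzer in standard ik_smart ik_max_word; do
    printf '%s: ' "$analyzer"
    curl -s -XPOST 'http://localhost:9200/tank/_analyze?pretty=true' \
      -H 'Content-Type: application/json' -d "$(analyze_body "$analyzer" "$text")" \
      | tokens_only | paste -sd ' ' -
  done
}
```

Usage: `compare_analyzers 中国高铁速度很快`.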

Please credit the source when reposting.

Author: 海底苍鹰

Source: http://blog.51yip.com/server/1892.html