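I won't cover installing IDEA; it's simple enough. I recommend IDEA to anyone working in Java or Scala. In my experience it is much nicer to use than Eclipse.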

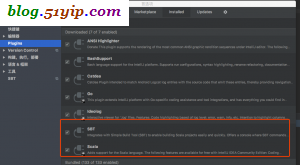

1. Install the Scala and sbt plugins in IDEA

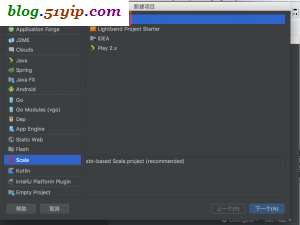

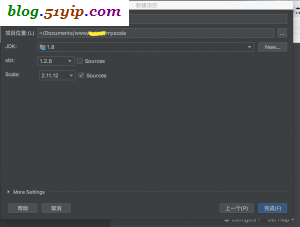

2. Create a Scala project

3. Edit build.sbt to add the Spark (spark-core) and spark-sql dependencies

```scala
name := "myscala"

version := "0.1"

scalaVersion := "2.11.12"

libraryDependencies ++= Seq(
  "org.apache.spark" % "spark-core_2.11" % "2.3.0",
  "org.apache.spark" % "spark-sql_2.11" % "2.3.0"
)
```
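The `_2.11` suffix in the artifact names has to match the Scala binary version set by `scalaVersion`. sbt's `%%` operator appends that suffix automatically, so an equivalent declaration (a sketch assuming the same Spark 2.3.0 artifacts) that stays in sync if `scalaVersion` is ever changed could look like this:

```scala
name := "myscala"

version := "0.1"

scalaVersion := "2.11.12"

// %% appends the Scala binary version (here "_2.11") to each artifact name
libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-core" % "2.3.0",
  "org.apache.spark" %% "spark-sql"  % "2.3.0"
)
```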

4. Create a test input file

```shell
$ mkdir input
$ echo "test my test tank test " > input/testword.txt
$ pwd
/Users/zhangying/Documents/www/myscala
```
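The echoed line ends with a trailing space, and the WordCount job splits each line with `split(" ")`. Java's `String.split` drops trailing empty strings, so that trailing space is harmless, but two consecutive spaces would produce an empty "word". The plain-Scala sketch below (no Spark needed) shows both cases; `SplitCheck` and `splitTolerant` are hypothetical names, and the whitespace-tolerant variant is an assumption, not part of the original program.

```scala
object SplitCheck {
  // Tokenize exactly as the WordCount job does: split on a single space
  def splitLikeJob(line: String): Array[String] = line.split(" ")

  // Hypothetical alternative: split on runs of whitespace, drop empty tokens
  def splitTolerant(line: String): Array[String] =
    line.split("\\s+").filter(_.nonEmpty)

  def main(args: Array[String]): Unit = {
    // Trailing empty string is dropped: [test,my,test,tank,test]
    println(splitLikeJob("test my test tank test ").mkString("[", ",", "]"))
    // A double space yields an empty token: [test,,my]
    println(splitLikeJob("test  my").mkString("[", ",", "]"))
    // Leading/repeated whitespace is ignored: [test,my]
    println(splitTolerant("  test  my ").mkString("[", ",", "]"))
  }
}
```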

5. Create a Scala object file

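```scala
import org.apache.spark.SparkContext
import org.apache.spark.SparkConf

object WordCount {
  def main(args: Array[String]): Unit = {
    // Run locally in a single JVM with one worker thread
    val conf = new SparkConf().setAppName("WordCount").setMaster("local")
    val sc = new SparkContext(conf)

    // Read every file under the "input" directory, one element per line
    val input = sc.textFile("input")
    // Split each line on spaces into individual words
    val words = input.flatMap(line => line.split(" "))
    // Pair each word with 1, then add up the 1s for each distinct word
    val counts = words.map(word => (word, 1)).reduceByKey(_ + _)
    // Write the results to "output"; this fails if the directory already exists
    counts.saveAsTextFile("output")

    sc.stop()
  }
}
```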

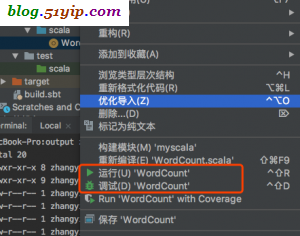
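To see what result to expect for the test file without starting Spark, the same pipeline (flatMap, map to `(word, 1)`, then sum per word) can be mirrored on ordinary Scala collections. This is only a sketch: `LocalWordCount` is a hypothetical name, and `groupBy` followed by a sum stands in for `reduceByKey`, which exists only on Spark pair RDDs.

```scala
object LocalWordCount {
  // Same steps as the Spark job, applied to an in-memory Seq of lines
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" "))                     // like RDD.flatMap
      .map(word => (word, 1))                               // like RDD.map
      .groupBy(_._1)                                        // group pairs by word
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // like reduceByKey(_ + _)

  def main(args: Array[String]): Unit = {
    val counts = wordCount(Seq("test my test tank test "))
    // Tuples print as "(word,count)", the same text saveAsTextFile writes
    counts.toSeq.sortBy(_._1).foreach(println) // (my,1) (tank,1) (test,3)
  }
}
```

For the single test line this prints `(my,1)`, `(tank,1)` and `(test,3)`. In the Spark job the same pairs are written to the `part-` files, but their order across files is not something to rely on.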

6. Run the Scala object. Afterwards, the `output` directory looks like this:

```
MacBook-Pro:output zhangying$ ll
total 20
drwxr-xr-x 8 zhangying staff 272 Aug 3 16:48 ./
drwxr-xr-x 9 zhangying staff 306 Aug 3 16:48 ../
-rw-r--r-- 1 zhangying staff 8 Aug 3 16:48 ._SUCCESS.crc
-rw-r--r-- 1 zhangying staff 12 Aug 3 16:48 .part-00000.crc
-rw-r--r-- 1 zhangying staff 12 Aug 3 16:48 .part-00001.crc
-rw-r--r-- 1 zhangying staff 0 Aug 3 16:48 _SUCCESS
-rw-r--r-- 1 zhangying staff 45 Aug 3 16:48 part-00000
-rw-r--r-- 1 zhangying staff 21 Aug 3 16:48 part-00001
```
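Spark writes one `part-NNNNN` file per partition of the final RDD (two here), plus an empty `_SUCCESS` marker and hidden `.crc` checksum files produced by the local filesystem. To view the combined result in one place, a small plain-Scala helper could merge the part files. The names `ReadOutput`, `readCounts` and `parseLine` are hypothetical, and the parser assumes each line is a tuple's `toString` such as `(test,3)`, which is what `saveAsTextFile` writes for `(String, Int)` pairs.

```scala
import java.io.File
import scala.io.Source

object ReadOutput {
  // Parse a saved line such as "(test,3)" back into ("test", 3).
  // Split at the last comma in case the word itself contains a comma.
  def parseLine(line: String): (String, Int) = {
    val body = line.trim.stripPrefix("(").stripSuffix(")")
    val i = body.lastIndexOf(',')
    (body.substring(0, i), body.substring(i + 1).toInt)
  }

  // Merge every part-NNNNN file in the directory. _SUCCESS and the hidden
  // .crc files are skipped because their names don't start with "part-".
  def readCounts(dir: File): Map[String, Int] = {
    val parts = Option(dir.listFiles()).getOrElse(Array.empty[File])
      .filter(f => f.isFile && f.getName.startsWith("part-"))
      .sortBy(_.getName)
    parts.flatMap { f =>
      val src = Source.fromFile(f, "UTF-8")
      try src.getLines().filter(_.nonEmpty).map(parseLine).toList
      finally src.close()
    }.toMap
  }

  def main(args: Array[String]): Unit =
    readCounts(new File("output")).toSeq.sortBy(_._1).foreach(println)
}
```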

Please credit the original when reposting.

Author: 海底苍鹰

Source: http://blog.51yip.com/hadoop/2160.html